Sound Event Detection with Machine Learning

Jon Nordby, Head of Data Science & Machine Learning, Soundsensing AS, jon@soundsensing.no. EuroPython 2021

Introduction

About Soundsensing

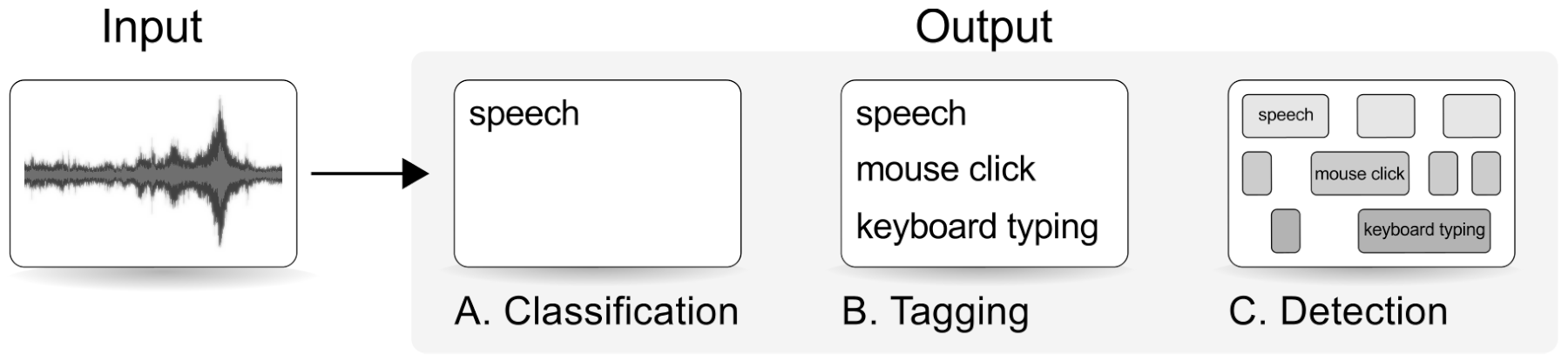

Sound Event Detection

Given input audio, return the timestamps (start, end) of the events for each event class.
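As a minimal sketch of what such an output could look like, assuming events are stored as (start, end, class) records with times in seconds. The `Event` name and the example values are illustrative and not taken from the talk.

```python
from typing import NamedTuple

class Event(NamedTuple):
    start: float  # seconds from the start of the recording
    end: float    # seconds from the start of the recording
    label: str    # event class

# Hypothetical detector output for a short clip
events = [
    Event(start=0.8, end=1.0, label='plop'),
    Event(start=2.9, end=3.1, label='plop'),
]

# Duration of each event in seconds, rounded for display
durations = [round(e.end - e.start, 3) for e in events]
```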

Events and non-events

Events are sounds with a clearly-defined duration or onset.

| Event (time limited) | Class (continuous) |
|---|---|
| Car passing | Car traffic |
| Honk | Car traffic |
| Word | Speech |
| Gunshot | Shooting |

Application

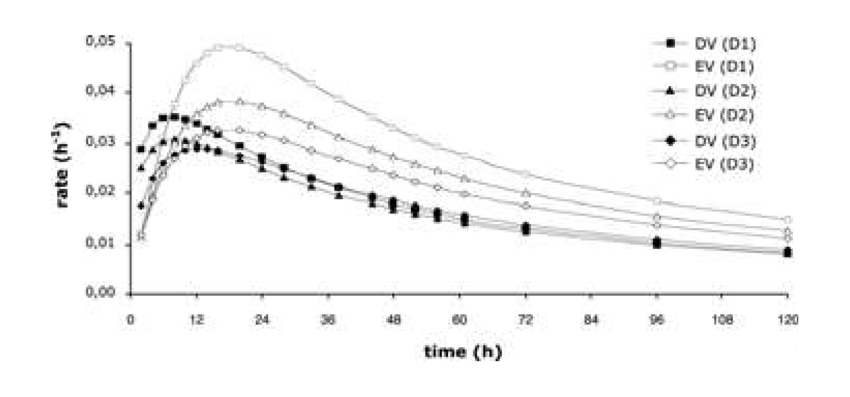

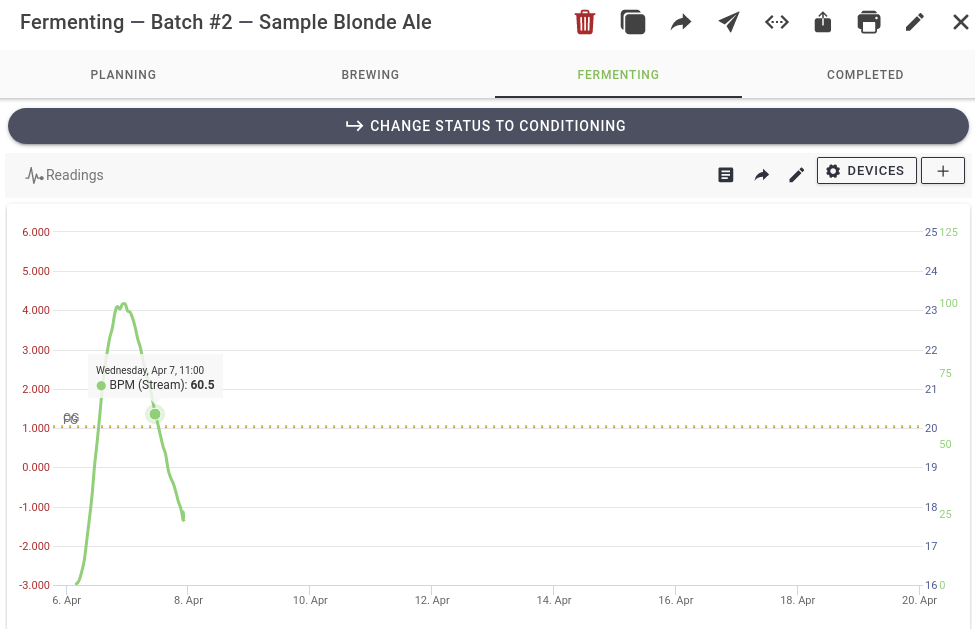

Fermentation tracking when making alcoholic beverages: beer, cider, wine, etc.

Alcohol is produced via fermentation

Airlock activity

Fermentation tracking

Fermentation activity can be tracked as Bubbles Per Minute (BPM).

Our goal

Make a system that tracks fermentation activity and outputs Bubbles Per Minute (BPM): capture the airlock sound with a microphone, then use Machine Learning to count each “plop”.

Machine Learning needs Data!

Supervised Machine Learning

Data requirements: Quantity

Need enough data.

| Instances per class | Suitability |
|---|---|
| 100 | Minimal |
| 1000 | Good |
| 10000+ | Very good |

Data requirements: Quality

Need realistic data, capturing the natural variation in:

- the event sound

- recording devices used

- recording environment

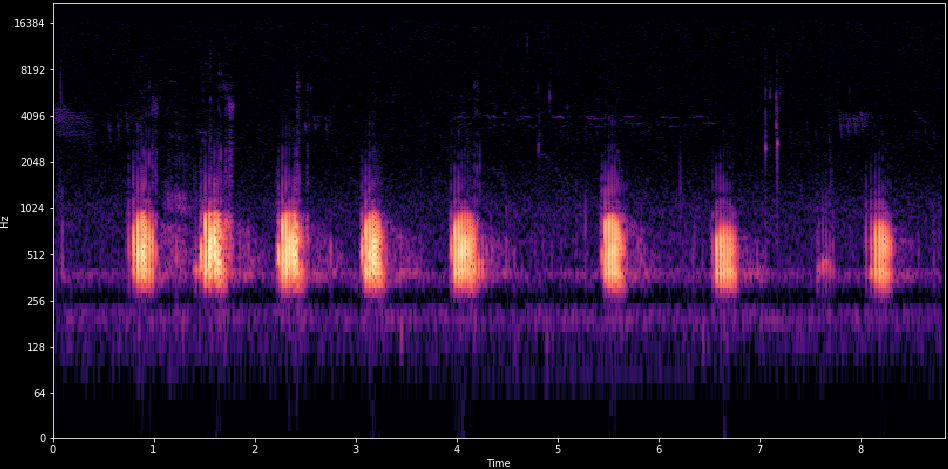

Check the data

Understand the data

Note down characteristics of the sound

- Event length

- Distance between events

- Variation in the event sound

- Changes over time

- Differences between recordings

- Background noises

- Other events that could be easily confused

Labeling data manually using Audacity
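Audacity can export a label track as a tab-separated text file with one `start`, `end`, `label` row per label. A minimal sketch of reading such a file into Python follows. The function name and the example data are made up for illustration, and the file name mentioned in the comment is hypothetical.

```python
import csv
import io

def read_audacity_labels(file):
    """Parse an Audacity label track export into (start, end, label) tuples."""
    events = []
    for row in csv.reader(file, delimiter='\t'):
        # Skip blank lines and optional frequency-range lines (they start with a backslash)
        if not row or row[0].startswith('\\'):
            continue
        start, end = float(row[0]), float(row[1])
        label = row[2] if len(row) > 2 else ''
        events.append((start, end, label))
    return events

# Made-up label data; for a real export use open('labels.txt', newline='')
example = io.StringIO("0.85\t1.02\tplop\n2.90\t3.05\tplop\n")
labels = read_audacity_labels(example)
```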

Machine Learning system

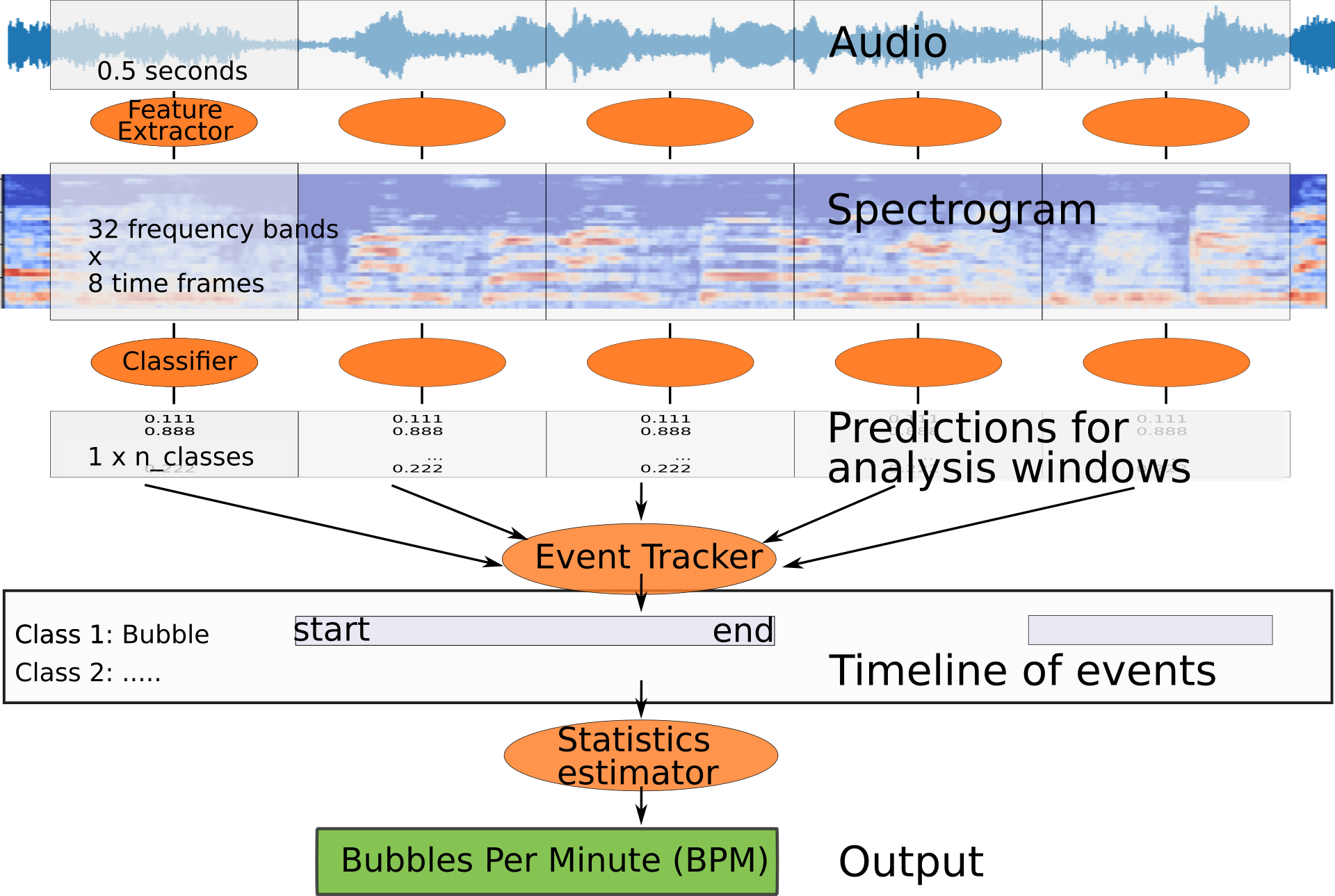

Audio ML pipeline overview

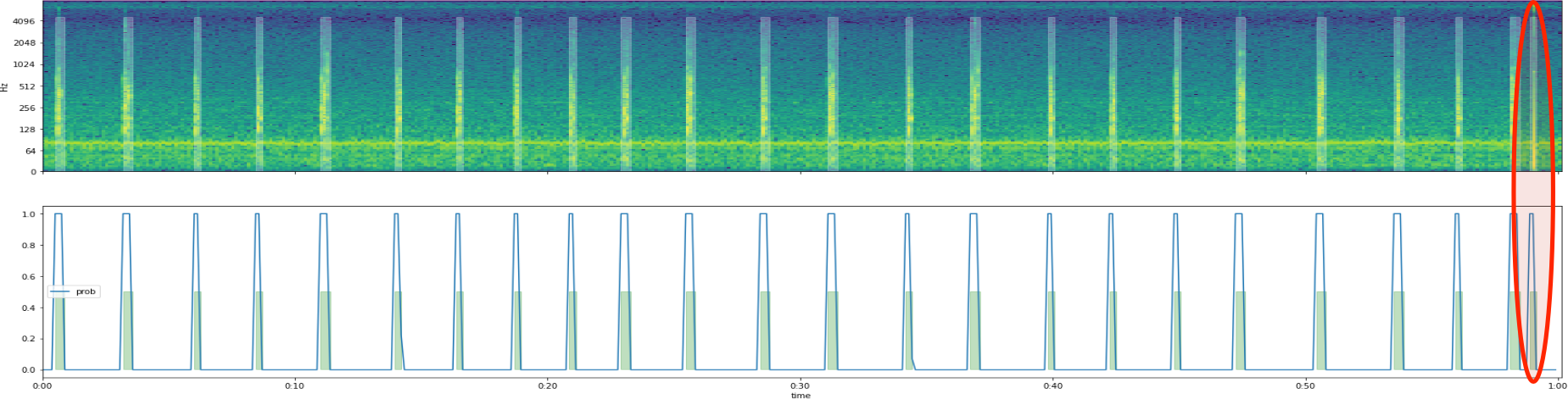

Spectrogram

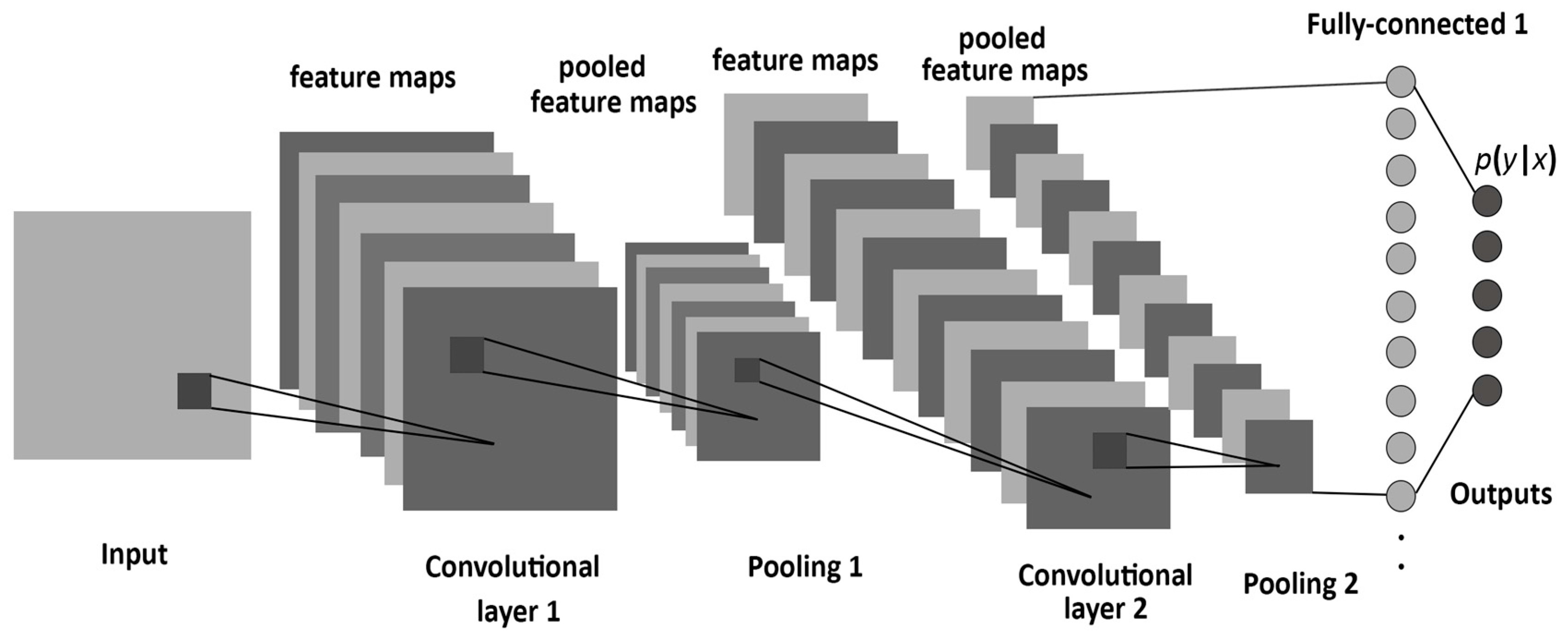
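To illustrate the idea, here is a rough sketch of a log-magnitude spectrogram computed with plain NumPy. It is not the feature extraction used in the talk: audio ML pipelines commonly use a mel-scaled variant from a dedicated library, and the frame and hop lengths below are arbitrary assumptions.

```python
import numpy

def log_spectrogram(samples, frame_length=512, hop_length=256):
    """Return a (frames, frequency bins) log-magnitude spectrogram in decibels."""
    window = numpy.hanning(frame_length)
    n_frames = 1 + (len(samples) - frame_length) // hop_length
    frames = numpy.stack([
        samples[i * hop_length : i * hop_length + frame_length] * window
        for i in range(n_frames)
    ])
    power = numpy.abs(numpy.fft.rfft(frames, axis=1)) ** 2
    # Convert to decibels; the small constant avoids log(0)
    return 10.0 * numpy.log10(power + 1e-10)

# Synthetic test signal: 1 second of a 1 kHz tone at 16 kHz samplerate
samplerate = 16000
t = numpy.arange(samplerate) / samplerate
tone = numpy.sin(2 * numpy.pi * 1000 * t)
spec = log_spectrogram(tone)

# Frequency bin with the most energy, averaged over time
peak_bin = int(spec.mean(axis=0).argmax())
```

With these settings each frequency bin spans 16000 / 512 = 31.25 Hz, so the 1 kHz tone lands in bin 32.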

CNN classifier model

Evaluation

Event Tracker

Converting to discrete list of events

- Threshold the probability from classifier

- Keep track of whether we are currently in an event or not
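The two steps above can be sketched as a small state machine. This is an illustrative version only: the function name, the default threshold of 0.5, and the example probabilities are assumptions, not values from the talk.

```python
def track_events(probabilities, hop_duration, threshold=0.5):
    """Convert per-window probabilities into a list of (start, end) times in seconds."""
    events = []
    in_event = False
    start = None
    for i, p in enumerate(probabilities):
        t = i * hop_duration
        if not in_event and p >= threshold:
            # Probability rose above the threshold: an event begins
            in_event = True
            start = t
        elif in_event and p < threshold:
            # Probability fell below the threshold: the event ends
            in_event = False
            events.append((start, t))
    if in_event:
        # Close an event that is still active at the end of the input
        events.append((start, len(probabilities) * hop_duration))
    return events

# Made-up classifier outputs, one per analysis window
probs = [0.1, 0.2, 0.9, 0.8, 0.1, 0.0, 0.7, 0.1]
detected = track_events(probs, hop_duration=0.5)
```

Consecutive windows above the threshold are merged into one event, so a single “plop” seen by several overlapping windows is counted once.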

Statistics Estimator

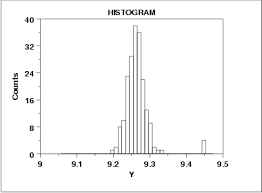

To compute the Bubbles Per Minute

- Using the typical time-between-events

- Assumes regularity

- Median more robust against outliers
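A minimal sketch of this estimator, assuming the event tracker provides onset times in seconds. The function name and the example onsets are made up for illustration.

```python
import statistics

def bubbles_per_minute(event_times):
    """Estimate BPM from event onset times (seconds), using the median interval."""
    if len(event_times) < 2:
        return None  # not enough events to measure an interval
    intervals = [b - a for a, b in zip(event_times, event_times[1:])]
    typical = statistics.median(intervals)
    return 60.0 / typical

# Made-up onsets: one bubble every 2 seconds, with one missed detection at 6.0
onsets = [0.0, 2.0, 4.0, 8.0, 10.0, 12.0]
bpm = bubbles_per_minute(onsets)
```

The missed detection produces a single 4-second interval. The median interval is still 2 seconds, giving 30 BPM, while the mean interval of 2.4 seconds would give 25 BPM.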

Tracking over time using Brewfather

Outro

More resources

Github project: jonnor/brewing-audio-event-detection

General Audio ML: jonnor/machinehearing

- Sound Event Detection: A tutorial. Virtanen et al.

- Audio Classification with Machine Learning (EuroPython 2019)

- Environmental Noise Classification on Microcontrollers (TinyML 2021)

Slack: Sound of AI community

What do you want to make?

Now that you know the basics of Audio Event Detection with Machine Learning in Python.

- Popcorn popping

- Bird call

- Cough

- Umm/aaa speech patterns

- Drum hits

- Car passing

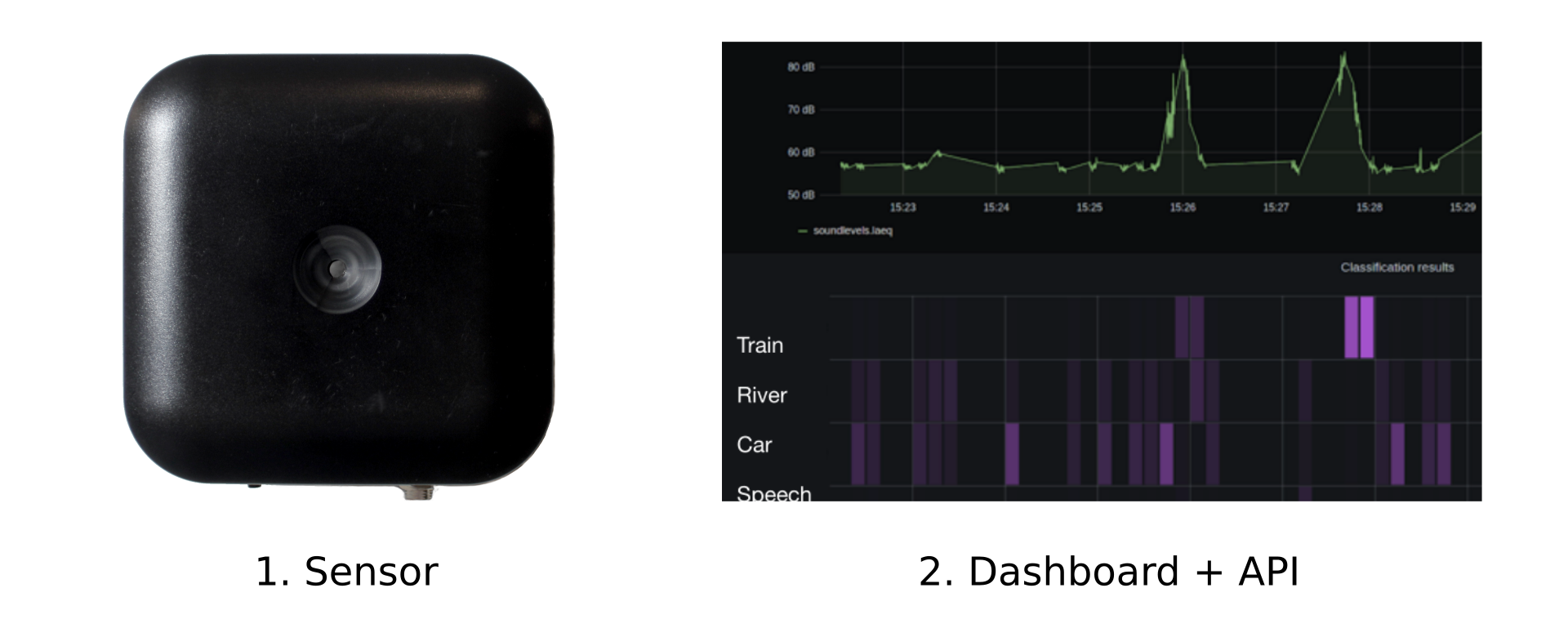

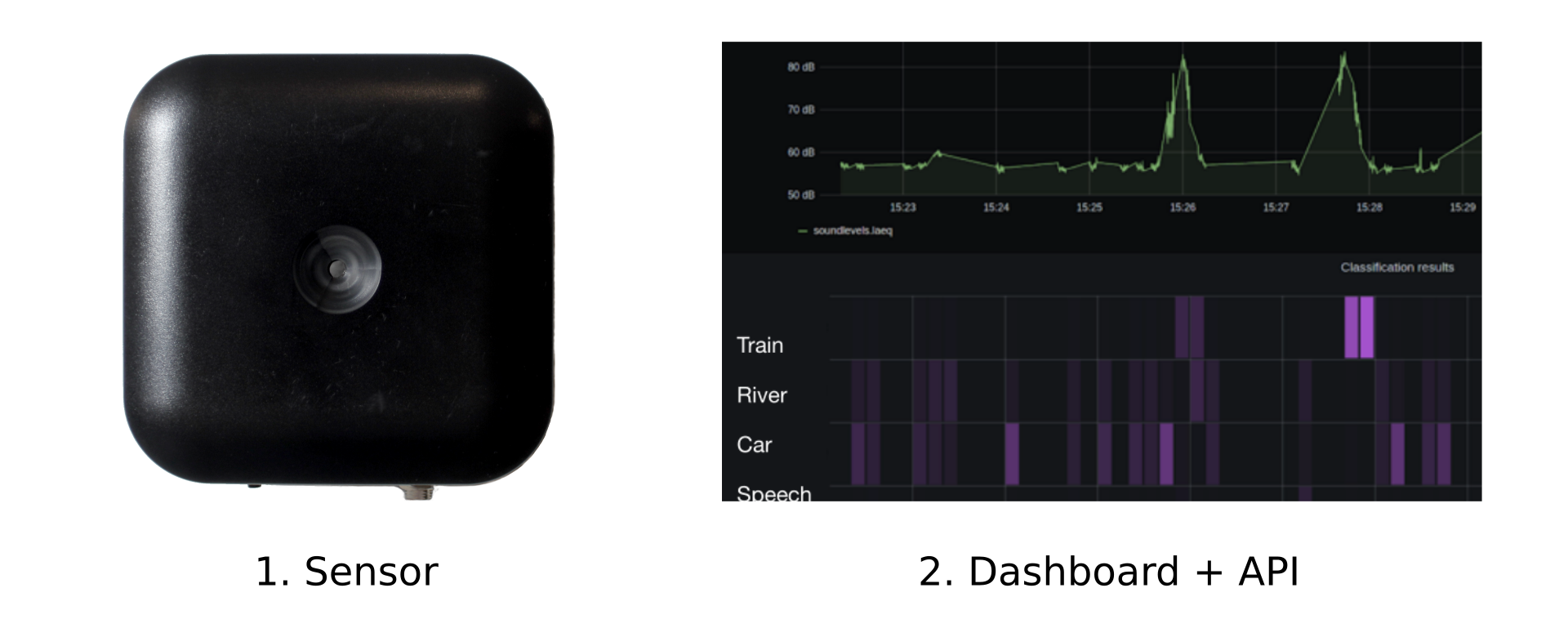

Continuous Monitoring using Audio ML

Want to deploy Continuous Monitoring with Audio? Consider using the Soundsensing sensors and data-platform.

Get in Touch! contact@soundsensing.no

Join Soundsensing

Want to work on Audio Machine Learning in Python? We have many opportunities.

- Full-time positions

- Part-time / freelance work

- Engineering thesis

- Internships

- Research or industry partnerships

Get in Touch! contact@soundsensing.no

Questions?

Bonus

Bonus slides after this point

Semi-automatic labelling

Using a Gaussian Mixture Model and Hidden Markov Model (GMM-HMM)

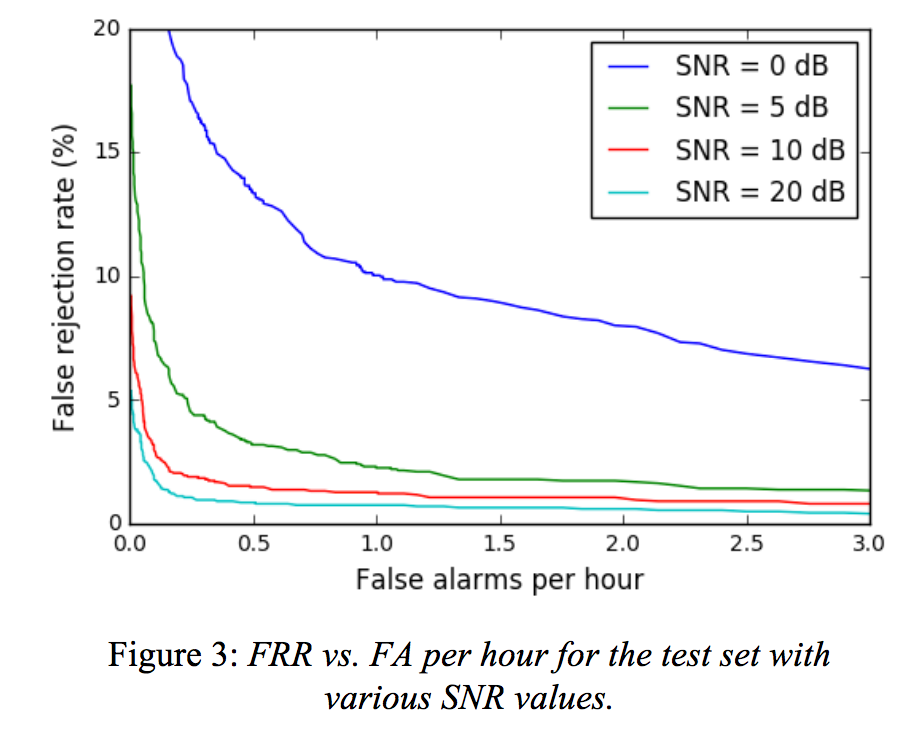

Synthesize data

How to get more data without gathering “in the wild”?

- Mix in different kinds of background noise.

- Vary the signal-to-noise ratio, etc.

- Useful to estimate performance on tricky, not-yet-seen data

- Can be used to compensate for small amount of training data

- scaper Python library: github.com/justinsalamon/scaper
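As a rough illustration of mixing an event into background noise at a chosen signal-to-noise ratio, here is a NumPy-only sketch. It does not use the scaper API, and the function name and the synthetic signals are assumptions made for the example.

```python
import numpy

def mix_at_snr(event, noise, snr_db):
    """Mix an event sound into background noise at the given signal-to-noise ratio (dB)."""
    event_power = numpy.mean(event ** 2)
    noise_power = numpy.mean(noise ** 2)
    # Scale the noise so that event_power / scaled_noise_power matches the target SNR
    target_noise_power = event_power / (10 ** (snr_db / 10))
    scaled_noise = noise * numpy.sqrt(target_noise_power / noise_power)
    return event + scaled_noise, scaled_noise

# Synthetic stand-ins for a recorded event and a background recording
rng = numpy.random.default_rng(0)
event = numpy.sin(2 * numpy.pi * 440 * numpy.arange(8000) / 8000)
noise = rng.normal(size=8000)

mixture, scaled_noise = mix_at_snr(event, noise, snr_db=6.0)

# Verify the SNR that was actually achieved
achieved_snr = 10 * numpy.log10(numpy.mean(event ** 2) / numpy.mean(scaled_noise ** 2))
```

Repeating this over several noise recordings and SNR values yields many variations from a small set of clean event recordings.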

Streaming inference

Key: Chopping up incoming stream into (overlapping) audio windows

```python
import datetime
import queue

import numpy
import sounddevice

# Setup audio stream from microphone
audio_queue = queue.Queue()

def audio_callback(indata, frames, time, status):
    # Called by sounddevice for each new block of audio
    audio_queue.put(indata.copy())

stream = sounddevice.InputStream(callback=audio_callback, ...)
...

# In classification loop
data = audio_queue.get()

# shift old audio towards the start, add new data at the end
audio_buffer = numpy.roll(audio_buffer, -len(data), axis=0)
audio_buffer[len(audio_buffer)-len(data):] = data
new_samples += len(data)

# check if we have received enough new data to do new prediction
if new_samples >= hop_length:
    new_samples = 0
    p = model.predict(audio_buffer)
    # an event is detected when the probability exceeds the threshold
    if p > threshold:
        print(f'EVENT DETECTED time={datetime.datetime.now()}')
```

Event Detection with Weakly Labeled data

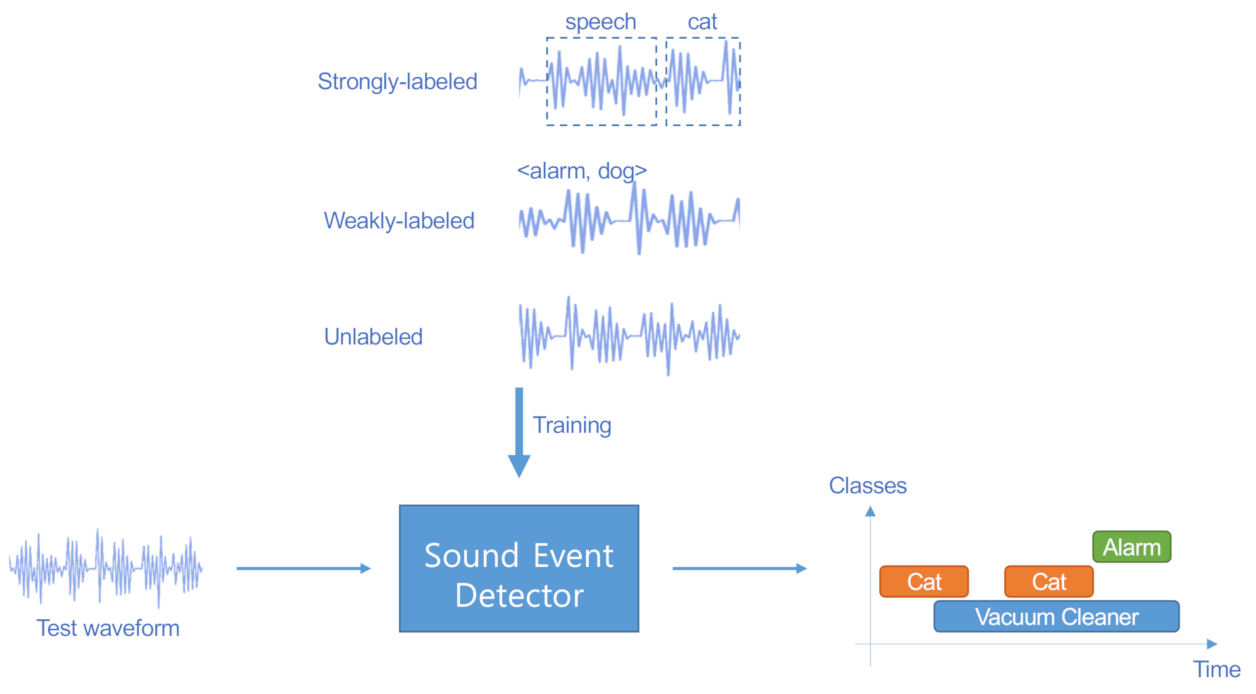

Can one learn Sound Event Detection without annotating the times for each event? Yes!

- Referred to as weakly labeled Sound Event Detection

- Can be tackled with Multiple Instance Learning

- Inputs: Audio clips consisting of 0-N events

- Labels: True if any events in clip, else false

- Multiple analysis windows per 1 label

- Using temporal pooling in Neural Network
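The temporal pooling step can be sketched outside of a neural network as follows. This shows only the forward computation, where per-window probabilities are reduced to one clip-level probability that is compared against the clip label. In a real model the pooling would be a layer inside the network so that training can propagate through it. The function name, the pooling options, and the example values are illustrative assumptions.

```python
import numpy

def clip_probability(window_probabilities, pooling='max'):
    """Pool per-window event probabilities into one clip-level probability."""
    p = numpy.asarray(window_probabilities, dtype=float)
    if pooling == 'max':
        # The clip contains an event if any single window does
        return float(p.max())
    elif pooling == 'mean':
        return float(p.mean())
    raise ValueError(f'Unknown pooling: {pooling}')

# Made-up window outputs for two clips
clip_with_event = [0.05, 0.10, 0.90, 0.20]
clip_without_event = [0.05, 0.10, 0.08, 0.02]

predictions = [clip_probability(c) >= 0.5 for c in (clip_with_event, clip_without_event)]
```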

Data collection via YouTube

Criteria for inclusion:

- Preferably a couple of minutes long, minimum 15 seconds

- No talking to the camera

- Mostly stationary camera

- No audio editing/effects

- One or more airlocks bubbling

- Bubbling can be heard by ear

Approx 1000 videos reviewed, 100 usable

Characteristics of Audio Events

- Duration

- Tonal/atonal

- Temporal patterns

- Percussive

- Frequency content

- Temporal envelope

- Foreground vs background

- Signal to Noise Ratio

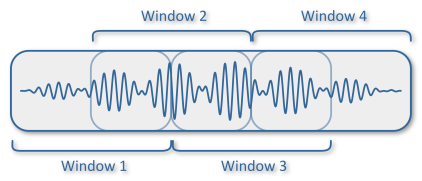

Analysis windows

Window length should be a bit longer than the event length.

Overlapping windows give the classifier multiple chances at seeing each event.

Increasing overlap (a shorter hop between windows) increases time resolution! Overlap for AES: 10%
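A minimal sketch of splitting audio into overlapping analysis windows, assuming the overlap is given as a fraction of the window length, so that the hop between windows is `window_length * (1 - overlap)`. The function name and the toy input are illustrative, and the input is assumed to be at least one window long.

```python
import numpy

def split_windows(samples, window_length, overlap):
    """Split a 1D array into overlapping windows. overlap is a fraction in [0, 1)."""
    hop_length = max(1, int(round(window_length * (1.0 - overlap))))
    # Only keep windows that fit entirely inside the input
    starts = range(0, len(samples) - window_length + 1, hop_length)
    windows = numpy.stack([samples[s:s + window_length] for s in starts])
    return windows, hop_length

# Toy input: sample indices stand in for audio samples
samples = numpy.arange(10)
windows, hop = split_windows(samples, window_length=4, overlap=0.5)
```

Here the hop is 2 samples, so the windows start at samples 0, 2, 4 and 6, and each sample in the middle of the input is seen by two windows.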