Classifying sound using Machine Learning

Jon Nordby jon@soundsensing.no

February 27, 2020

Introduction

Jon Nordby

Internet of Things specialist

- B.Eng in Electronics

- 10 years as software developer. Embedded + Web

- M.Sc. in Data Science

Now:

- CTO at Soundsensing

Thesis

Environmental Sound Classification on Microcontrollers using Convolutional Neural Networks

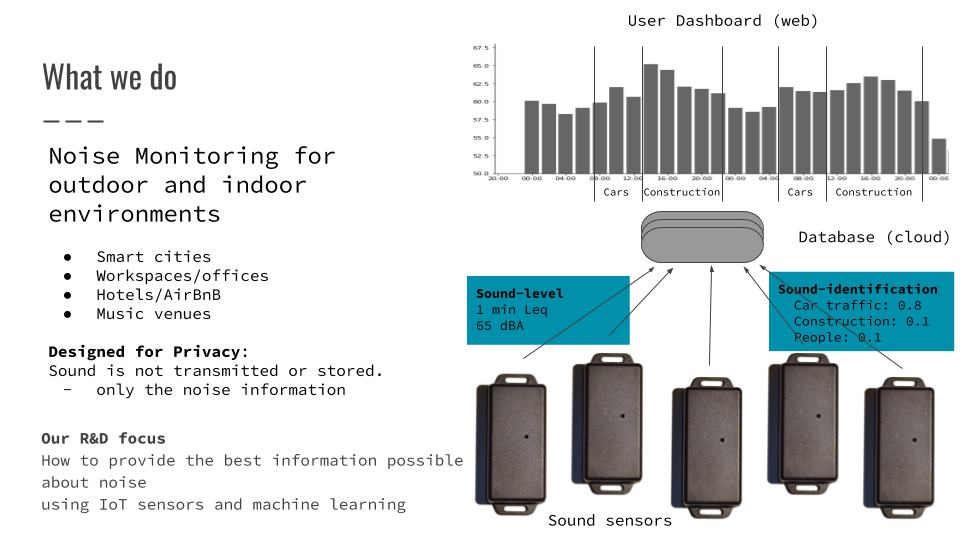

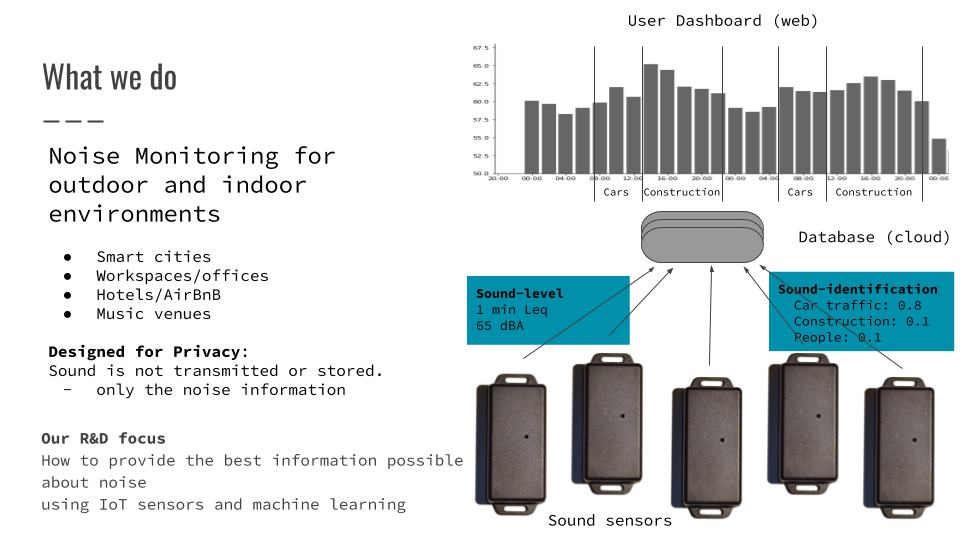

Soundsensing

Dashboard

Goal

The goals of this talk

For you as developers to understand:

- possibilities and applications of Audio ML

- the overall workflow of creating an Audio Classification solution

- what Soundsensing provides in this area

Background

Why Audio Classification

- Rich source of information

- Any physical motion creates sound

- Sound spreads in a space

- Enables non-invasive sensing

- Great complement to image/video

- Humans rely on hearing for many tasks

- Machines can now reach similar performance on many of these

Applications

Audio sub-fields

- Speech Recognition. Keyword spotting.

- Music Analysis. Genre classification.

- General / other

Examples

- Eco-acoustics. Analyze bird migrations

- Wildlife preservation. Detect poachers in protected areas

- Manufacturing Quality Control. Testing electric car seat motors

- Security. Highlight CCTV feeds with verbal aggression

- Medical. Detect heart murmurs

- Process industry. Advance the process once an audible event happens (popcorn popping)

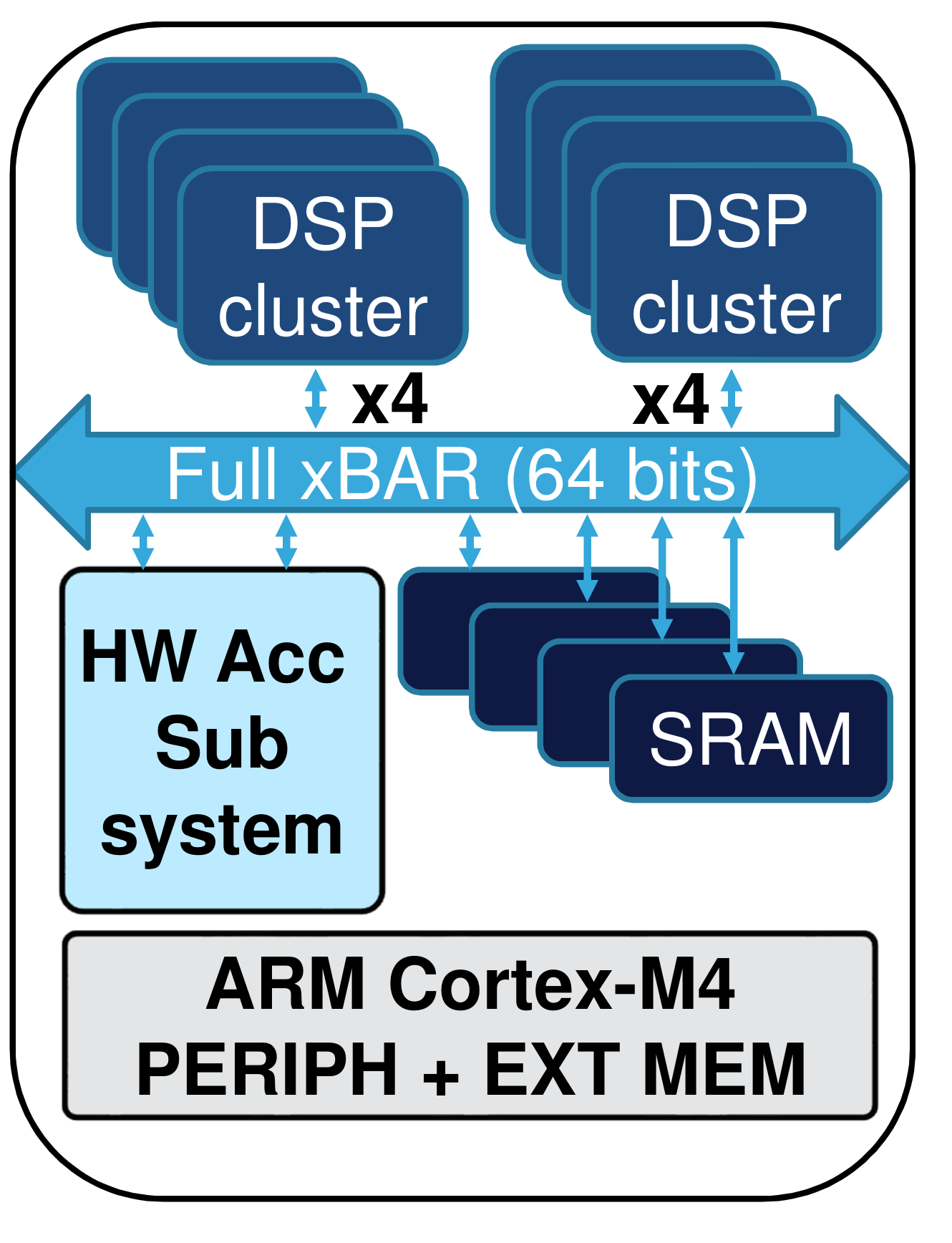

Neural Network co-processors

Expected 10x increase in power efficiency.

Making an Audio ML solution

Overall process

- Problem definition

- Data collection

- Data labeling

- Training setup

- Feature representation

- Model

- Evaluation

- Deployment

Example task

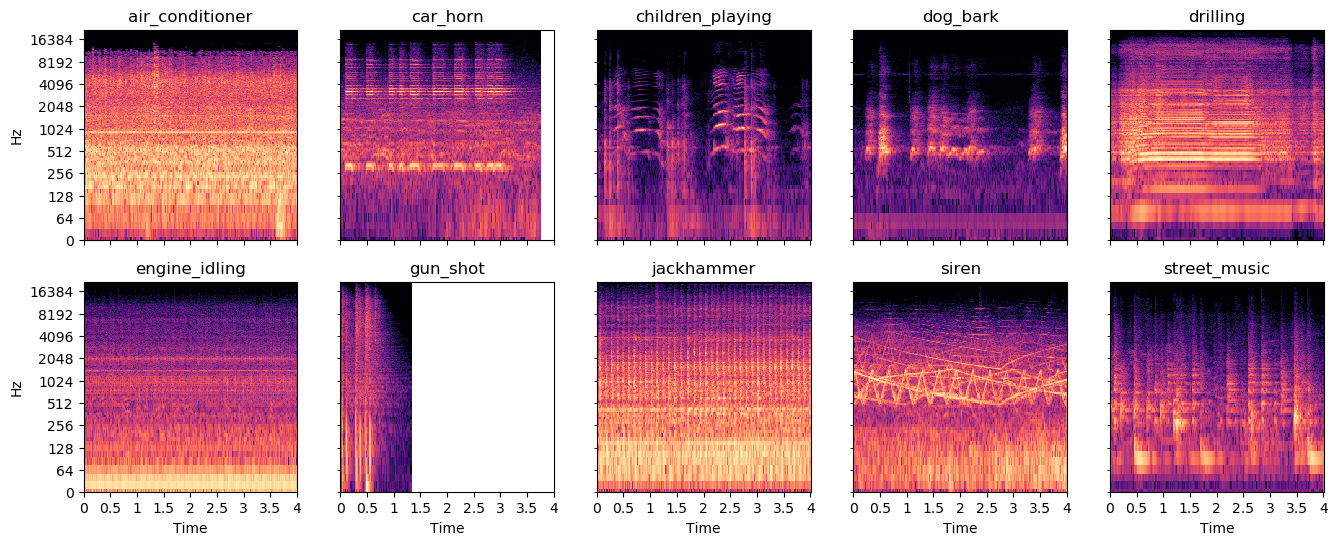

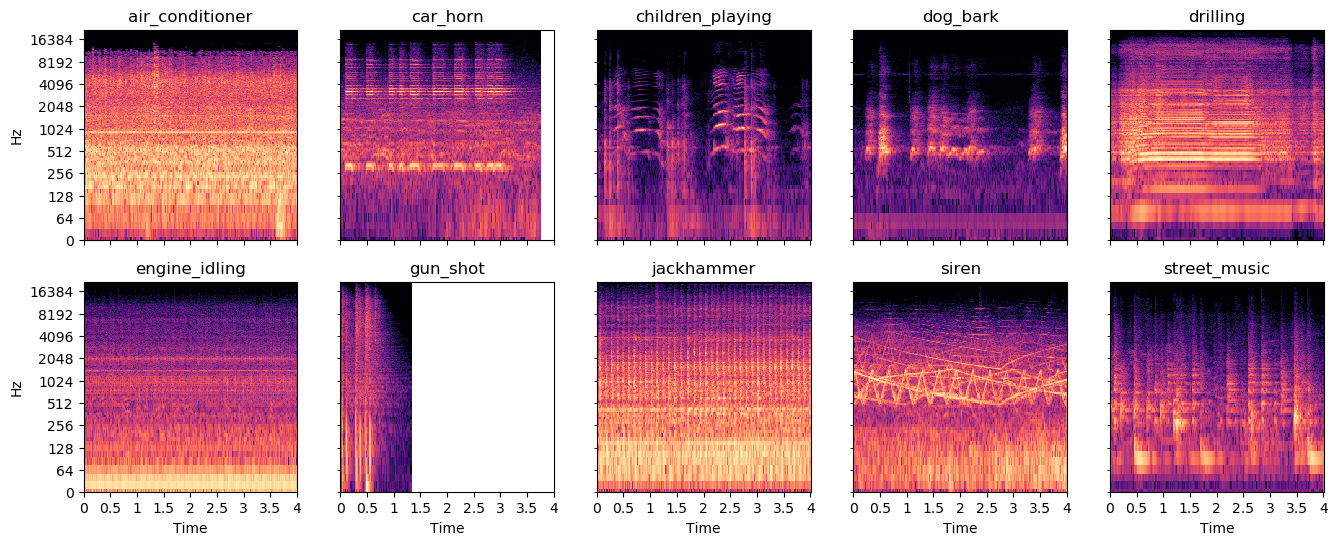

Noise Classification in Urban environments. AKA “Environmental Sound Classification”

Given an audio signal of environmental sounds, determine which class it belongs to.

- Widely researched. 1000 hits on Google Scholar

- Open Datasets. Urbansound8k (10 classes), ESC-50, AudioSet (632 classes)

- 2017: Human-level performance on ESC-50

Supervised Learning

Learning process

Audio Classification

Given an audio clip with some sounds, determine which class it is.

Classification simplifications

- Single output. One class at a time

- Discrete. Exists or not

- Closed set. Must be known class
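These simplifications decide how the model output is read: one probability per known class, and the single most likely class is the answer. A minimal sketch, assuming the model emits one raw score (logit) per class; the class names and scores are hypothetical:

```python
import math

def softmax(logits):
    """Turn raw per-class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(logits, class_names):
    """Single output, closed set: always pick exactly one of the known classes."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return class_names[best], probs[best]

# Hypothetical class names and model scores, for illustration only
classes = ["dog_bark", "siren", "drilling"]
label, confidence = classify([0.5, 2.0, -1.0], classes)
print(label, round(confidence, 2))
```

Because the set is closed, a sound that belongs to none of the classes is still forced into one of them.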

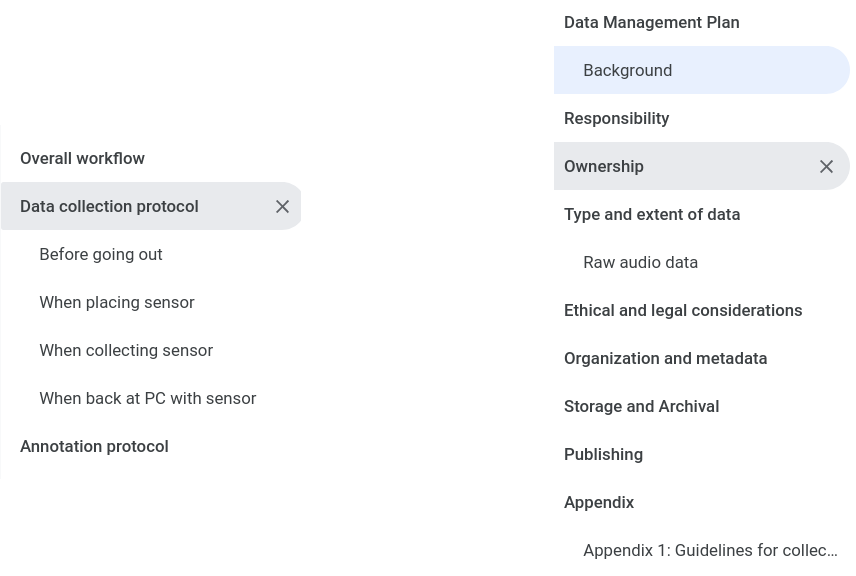

Data collection

Key challenges

- Ensuring representativeness

- Ensuring coverage

- Maintaining structure

- Capturing relevant metadata

- Maintaining privacy

Data management

Best practice: design the process and document it in a protocol

Data Requirements

Depends on problem difficulty

- Number of classes

- Variation inside class

- Distance between classes

- Other sounds, outside classes

Targets

- Must: >100 labeled instances per class

- Want: >1000 labeled instances per class

Urbansound8k: 10 classes, 11 hours of annotated audio
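The per-class targets are easy to check automatically as labels come in. A minimal sketch, assuming labels are available as a flat list of class-name strings; the class names and counts are hypothetical:

```python
from collections import Counter

MUST_MIN = 100   # "Must: >100 labeled instances per class"
WANT_MIN = 1000  # "Want: >1000 labeled instances per class"

def check_label_counts(labels, class_names=None):
    """Sort classes by whether they meet the per-class instance targets."""
    counts = Counter(labels)
    names = sorted(set(class_names or []) | set(counts))  # include classes with 0 labels
    report = {"below_must": [], "below_want": [], "ok": []}
    for name in names:
        n = counts[name]  # Counter returns 0 for missing keys
        if n <= MUST_MIN:
            report["below_must"].append((name, n))
        elif n <= WANT_MIN:
            report["below_want"].append((name, n))
        else:
            report["ok"].append((name, n))
    return report

# Hypothetical labels, e.g. loaded from an annotation export
labels = ["siren"] * 1500 + ["dog_bark"] * 400 + ["gun_shot"] * 60
report = check_label_counts(labels, class_names=["siren", "dog_bark", "gun_shot", "jackhammer"])
print(report)
```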

Data labeling

Challenge: keeping quality high and costs low

- What is the human level performance?

- Annotator self-agreement

- Inter-annotator agreement
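Both kinds of agreement can be quantified with Cohen's kappa, which corrects the raw agreement rate for agreement expected by chance. The slides do not name a metric, so this is one common choice, not necessarily the one used here. Inter-annotator agreement compares two people on the same clips; self-agreement compares one person labeling the same clips twice:

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two label sequences for the same clips."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equally long, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    # Probability that both pick the same class if each labeled at random
    # with their own class frequencies
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both used one single, identical label everywhere
    return (observed - expected) / (1.0 - expected)

# Hypothetical labels from two annotators on the same four clips
annotator_1 = ["dog_bark", "dog_bark", "siren", "siren"]
annotator_2 = ["dog_bark", "siren", "siren", "siren"]
print(cohens_kappa(annotator_1, annotator_2))
```

Here 75% raw agreement shrinks to a kappa of 0.5, since half the matches would be expected by chance.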

How to label

- Outsource. Data labeling services, Mechanical Turk, ..

- Crowdsource. From the public. From your users?

- In-house. Dedicated resource? Part of DS team?

- Automated. Other data sources? Existing models?

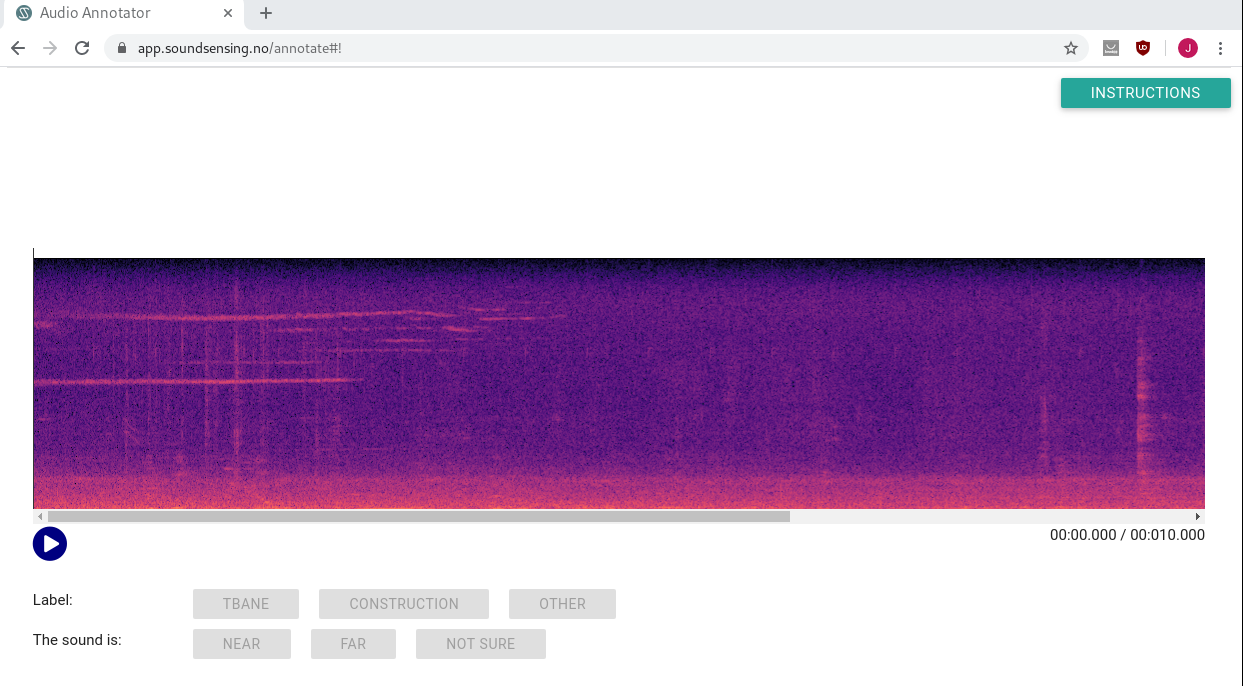

Annotation tools

Curated dataset

Pipeline

Convolutional Neural Network

Model

Evaluation

Summary

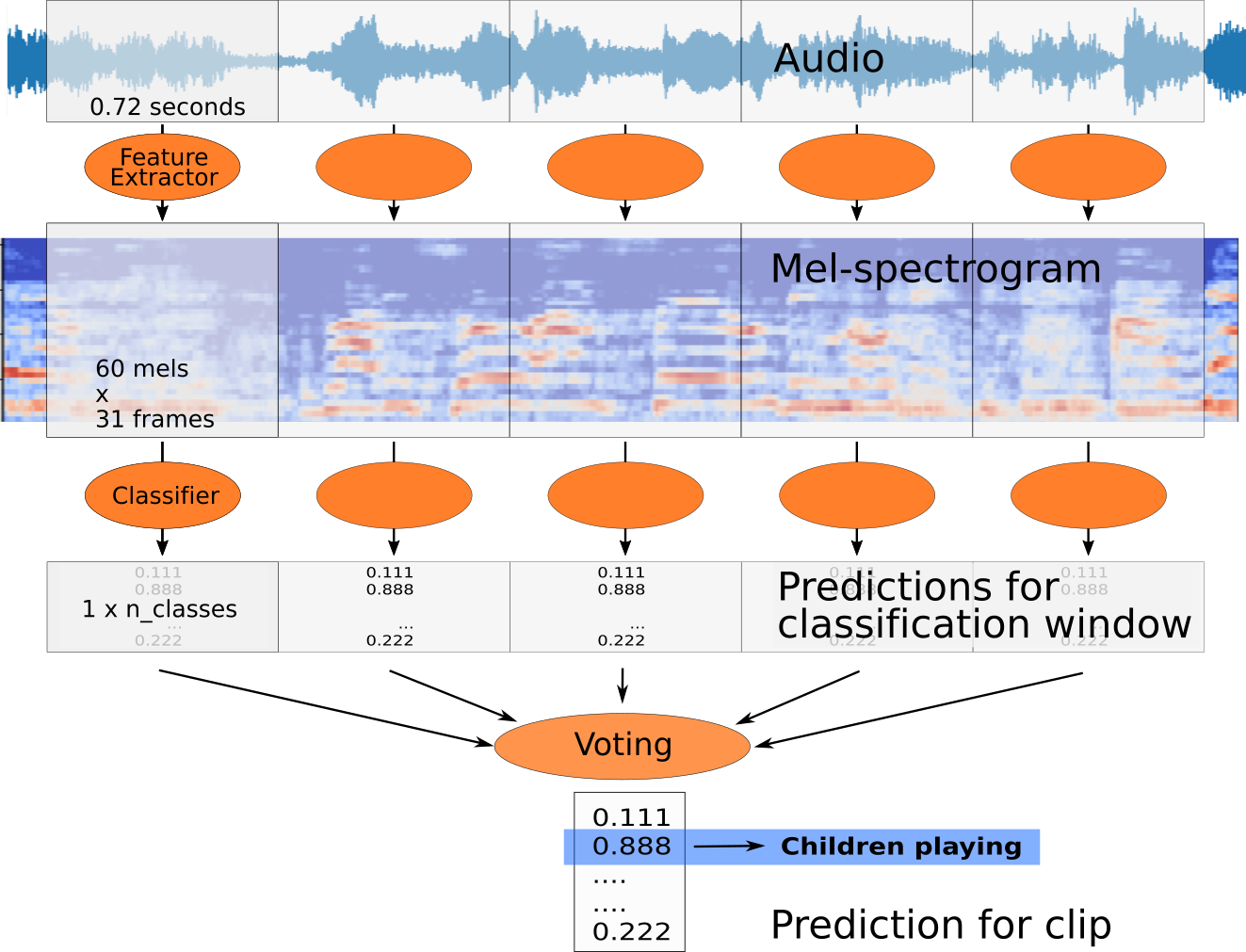

Standard models exist

Try the standard audio pipeline first; it often does OK.

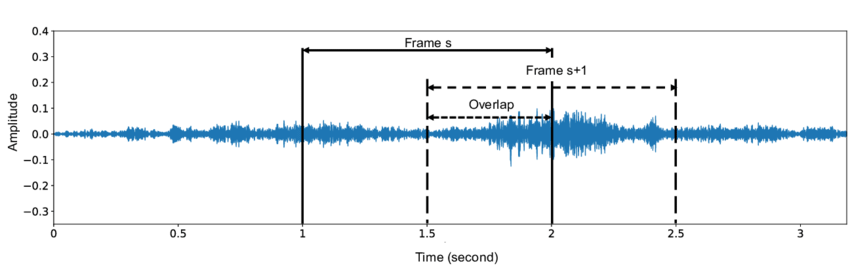

- Fixed-length analysis windows

- Use log-mel spectrograms as features

- Convolutional Neural Network as classifier

- Aggregate the predictions from each window

All are available as open-source solutions.
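The windowing and aggregation steps around the classifier can be sketched as below. `predict_window` is a hypothetical stand-in for the log-mel + CNN part, and averaging the per-window probabilities is an assumption (max or majority vote are other options):

```python
def split_windows(samples, window_len, hop_len):
    """Cut a signal into fixed-length analysis windows (a short tail is dropped)."""
    return [samples[start:start + window_len]
            for start in range(0, len(samples) - window_len + 1, hop_len)]

def aggregate_mean(window_probs):
    """Average per-window class probabilities into one clip-level prediction."""
    n_classes = len(window_probs[0])
    return [sum(p[c] for p in window_probs) / len(window_probs)
            for c in range(n_classes)]

def classify_clip(samples, predict_window, window_len, hop_len):
    windows = split_windows(samples, window_len, hop_len)
    probs = aggregate_mean([predict_window(w) for w in windows])
    return max(range(len(probs)), key=probs.__getitem__), probs

def fake_predict(window):
    # Hypothetical stand-in for log-mel + CNN: loud windows vote for class 1
    loud = max(abs(x) for x in window) > 0.5
    return [0.2, 0.8] if loud else [0.9, 0.1]

signal = [0.0] * 8 + [0.9] * 16
best, probs = classify_clip(signal, fake_predict, window_len=8, hop_len=8)
print(best, probs)
```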

Data collection is not magic

- Structure collection upfront

- Budget resources for collection and labeling

- Integrate quality checking

- Build it up gradually

Doable, but takes time!

Now what

So we have a model that performs Audio Classification on our PC.

But we want to monitor a real-world phenomenon.

How to deploy this?

Deploying

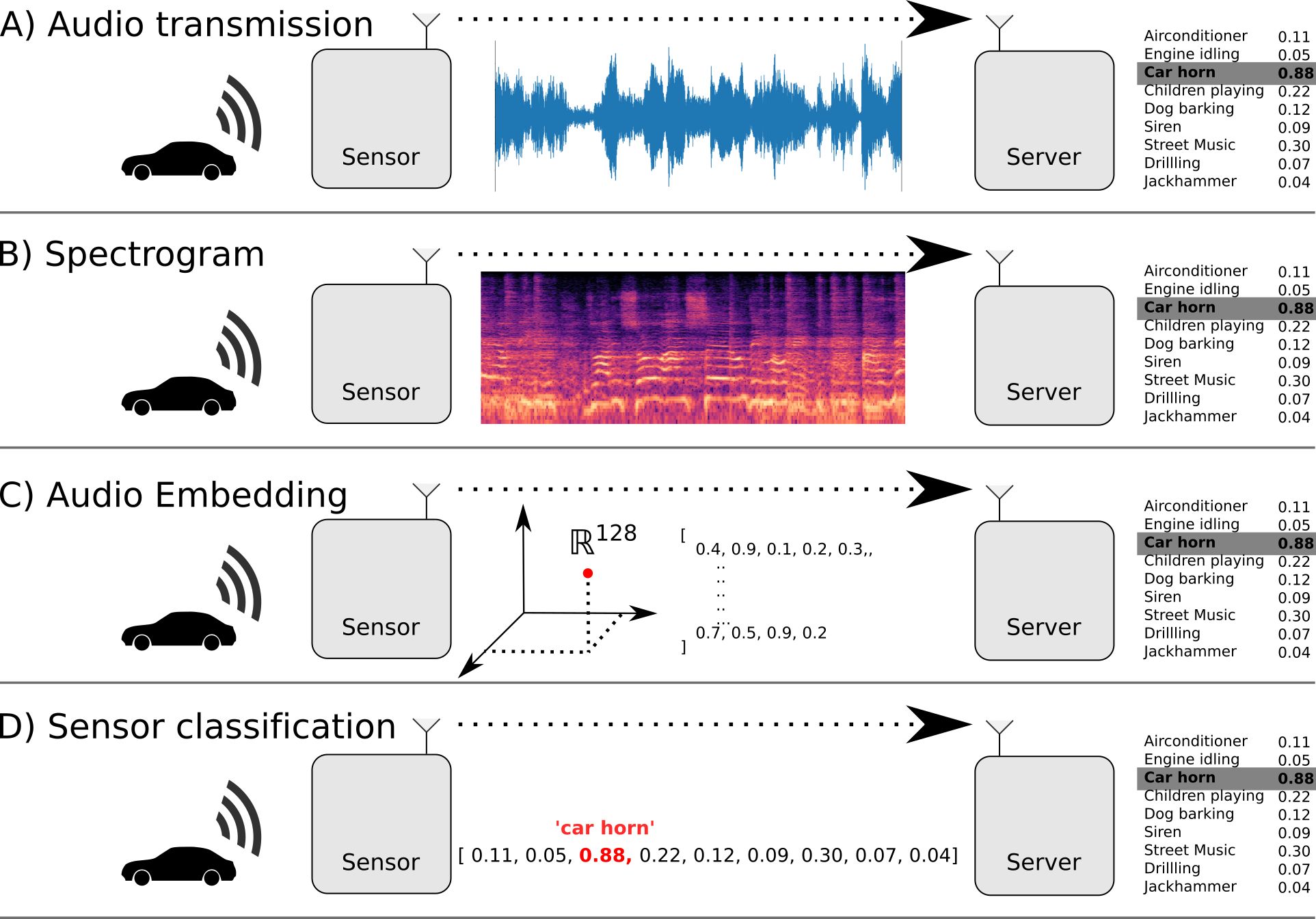

Architectures

Soundsensing Platform

Demo video

Noise Monitoring solution

Outro

Partner opportunities

We are building a partner network

- Partner role: Deliver solutions and integration on platform

- Especially cases that require a lot of custom work

Email: jon@soundsensing.no

Employment opportunities

Think this sounds cool to work on?

- Internships in Data Science now

- Hiring 2 developers in 2020

Email: jon@soundsensing.no

Investment opportunities

Want to invest in a Machine Learning and Internet of Things startup?

- Funding round open now

- 60% committed

- Some open slots still

Email: ole@soundsensing.no

More resources

Machine Hearing. ML on Audio

Machine Learning for Embedded / IoT

Thesis Report & Code

Soundsensing

Questions

?

Email: jon@soundsensing.no

BONUS

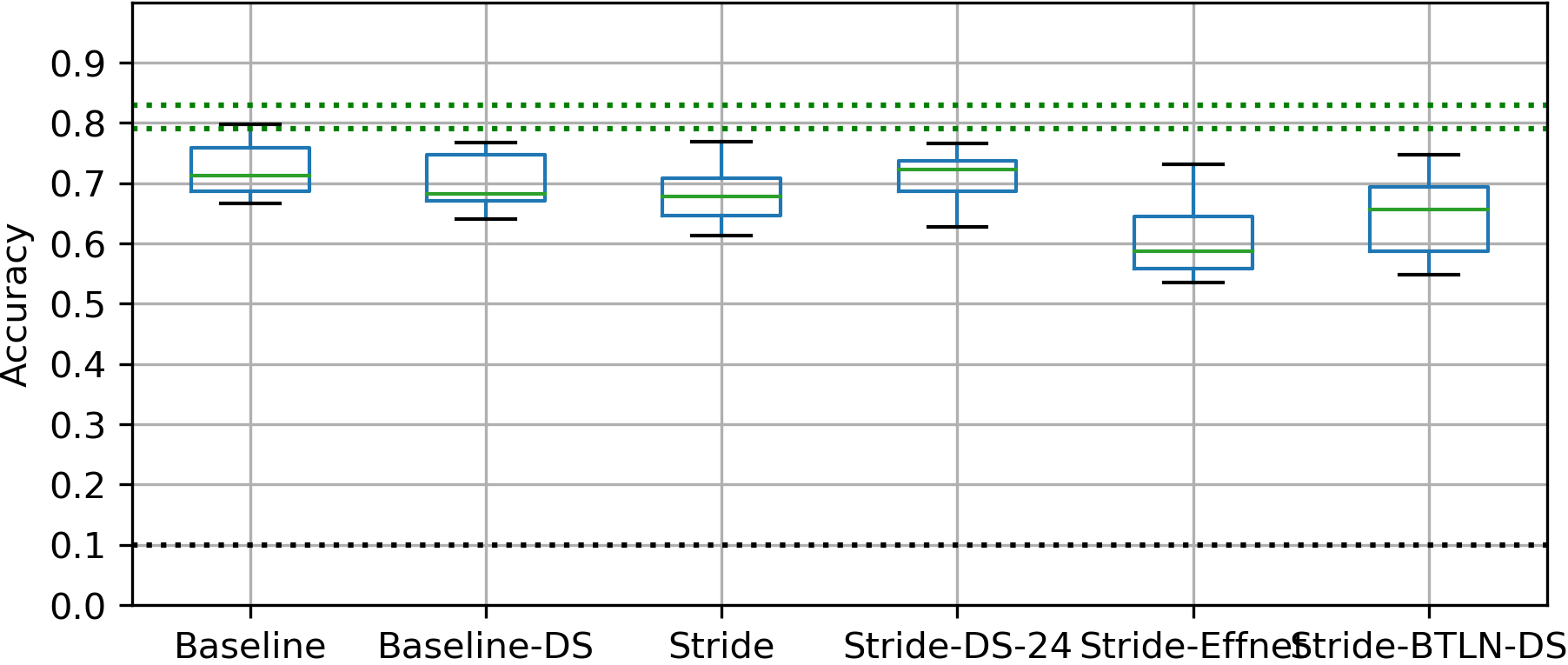

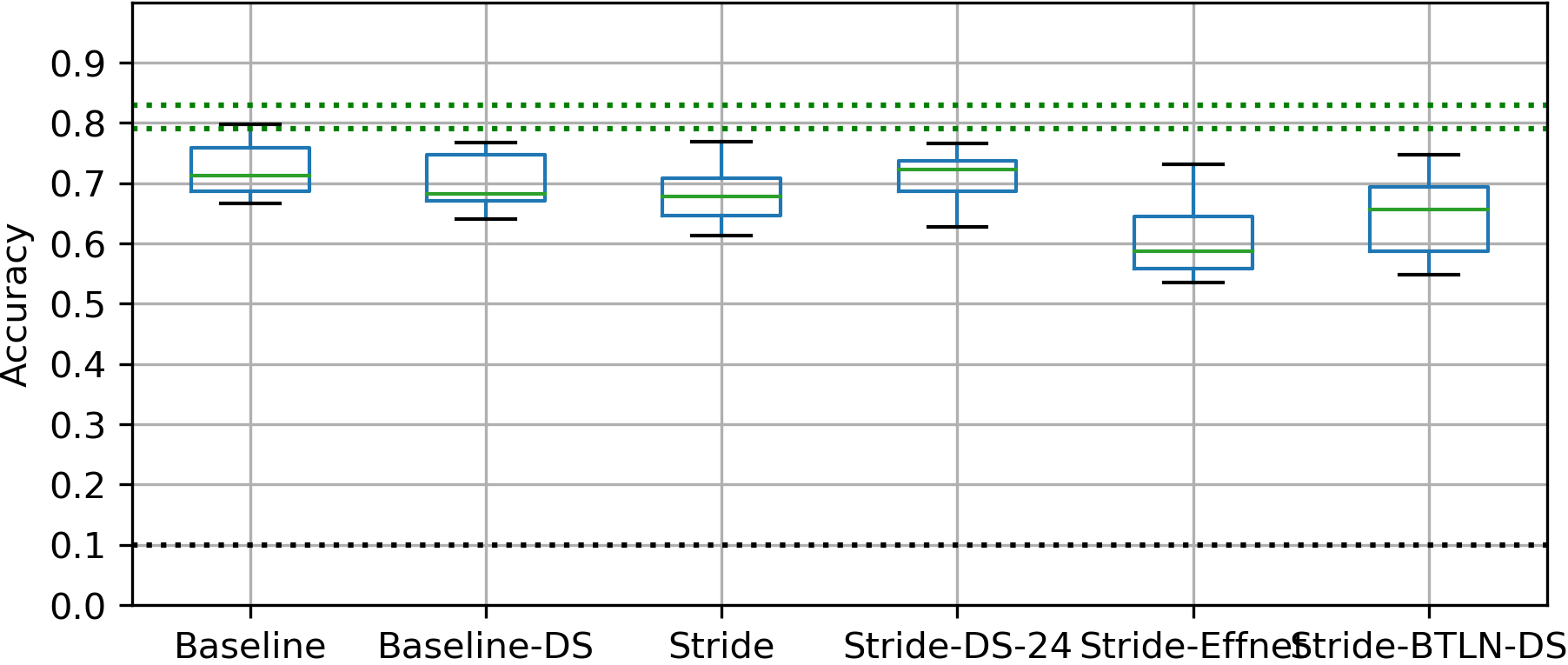

Thesis results

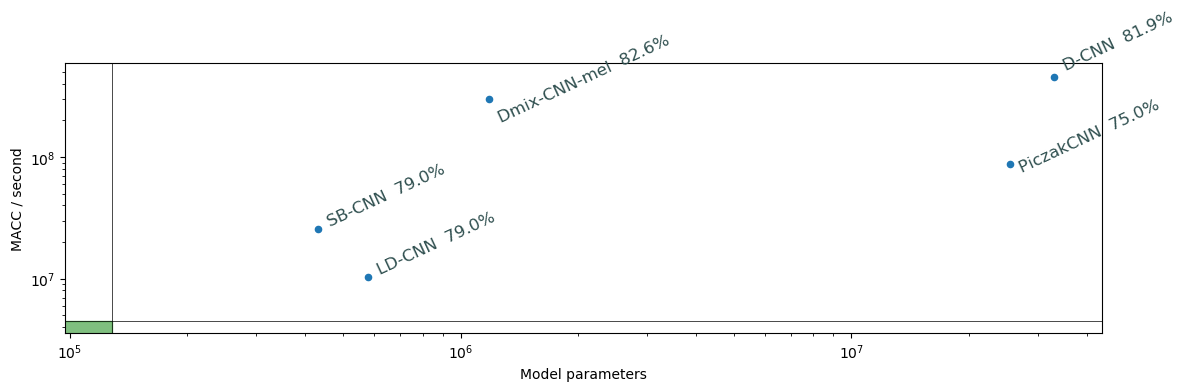

Model comparison

List of results

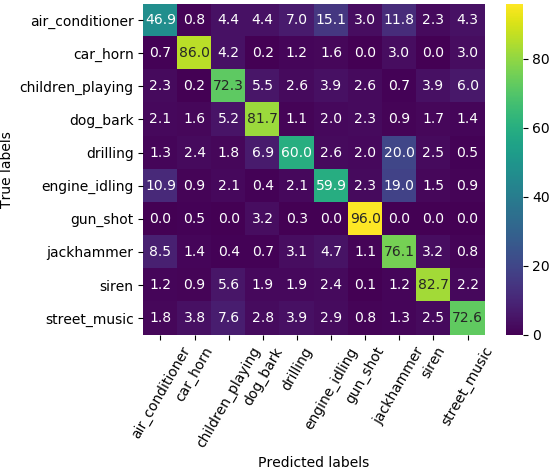

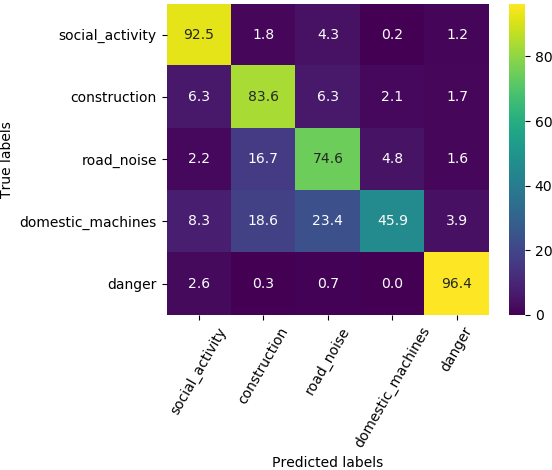

Confusion

Grouped classification

Foreground-only

Unknown class

Background

Mel-spectrogram

Noise Pollution

Harms health through stress and loss of sleep

In Norway

- 1.9 million affected by road noise (2014, SSB)

- 10’000 healthy years lost per year (Folkehelseinstituttet)

In Europe

- 13 million suffering from sleep disturbance (EEA)

- 900’000 DALYs lost (WHO)

Noise Mapping

Simulation only, no direct measurements

Audio Machine Learning on low-power sensors

Wireless Sensor Networks

- Want: Wide and dense coverage

- Need: Sensors need to be low-cost

- Opportunity: Wireless reduces costs

- Challenge: Power consumption

What do you mean by low-power?

Want: 1-year lifetime on a palm-sized battery

Need: <1 mW system power
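The link between the two numbers is plain energy arithmetic: average power times lifetime gives the energy the battery must store. A sketch for an ideal battery (no self-discharge, temperature or converter losses); the battery capacity is an input you supply, not a figure from the slides:

```python
HOURS_PER_YEAR = 24 * 365

def max_average_power_mw(battery_wh, years=1.0):
    """Highest average power draw that lasts the target lifetime (ideal battery)."""
    return battery_wh / (years * HOURS_PER_YEAR) * 1000.0

def lifetime_days(battery_wh, average_power_mw):
    """Ideal lifetime in days: stored energy divided by average power."""
    return battery_wh / (average_power_mw / 1000.0) / 24.0

# 1 mW for one year needs about 8.76 Wh of stored energy
needed_wh = 1.0 / 1000.0 * HOURS_PER_YEAR
print(needed_wh)
# The same energy at ~10 mW (a microcontroller running continuously) lasts ~36.5 days
print(lifetime_days(needed_wh, 10.0))
```

This is why a ~10 mW general-purpose microcontroller cannot run inference continuously on such a budget without duty cycling.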

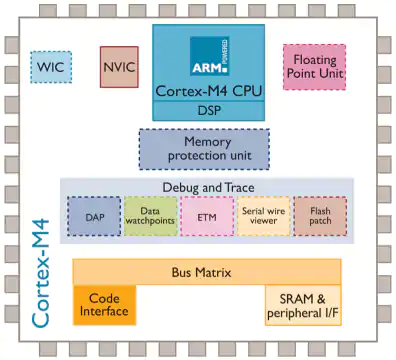

General purpose microcontroller

STM32L4 @ 80 MHz. Approx 10 mW.

- TensorFlow Lite for Microcontrollers (Google)

- ST X-CUBE-AI (ST Microelectronics)

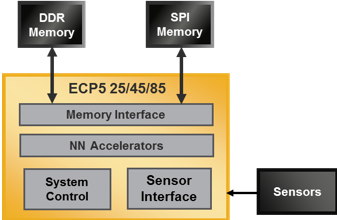

FPGA

Human presence detection. VGG8 on 64x64 RGB image, 5 FPS: 7 mW.

Audio ML approx 1 mW

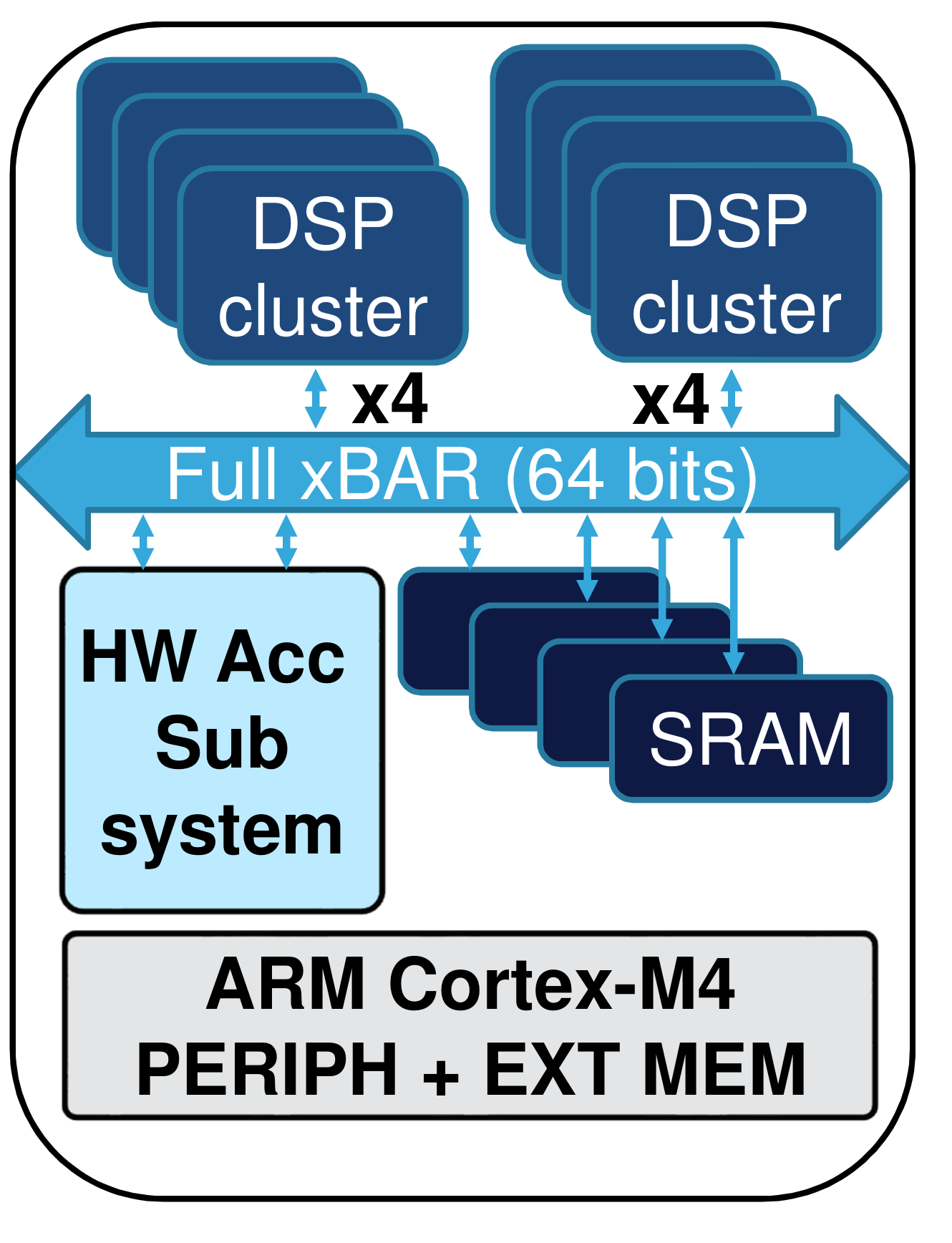

Neural Network co-processors micro

2.9 TOPS/W. AlexNet, 1000 classes, 10 FPS: 41 mW.

Audio models probably <1 mW.

Urbansound8k

Environmental Sound Classification on edge

Existing work

- Convolutional Neural Networks dominate

- Techniques come from image classification

- Mel-spectrogram input standard

- End-to-end models: getting close in accuracy

- “Edge ML” focused on mobile-phone-class hardware

- “Tiny ML” (sensors) just starting

Model requirements

With 50% of STM32L476 capacity:

- 64 kB RAM

- 512 kB FLASH memory

- 4.5 M MACC/second
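A candidate model can be checked against this budget before any training. A rough sketch that counts only convolution layers (no pooling, dense layers, biases or framework overhead) and assumes peak RAM is one layer's input plus output activations; the input shape and layer sizes are hypothetical:

```python
BUDGET = {"macc_per_s": 4.5e6, "flash_bytes": 512 * 1024, "ram_bytes": 64 * 1024}

def conv2d_cost(h, w, c_in, c_out, k, stride):
    """MACCs, weight count and output shape of a 'same'-padded 2D convolution."""
    out_h, out_w = -(-h // stride), -(-w // stride)  # ceiling division
    maccs = out_h * out_w * k * k * c_in * c_out
    params = k * k * c_in * c_out  # biases ignored
    return maccs, params, (out_h, out_w, c_out)

def model_usage(input_shape, layers, windows_per_s, bytes_per_value=4):
    """Rough resource estimate for conv layers given as (c_out, k, stride)."""
    h, w, c = input_shape
    total_maccs = total_params = peak_ram = 0
    for c_out, k, stride in layers:
        maccs, params, (oh, ow, oc) = conv2d_cost(h, w, c, c_out, k, stride)
        total_maccs += maccs
        total_params += params
        # Assume one layer's input and output activations are held in RAM at once
        peak_ram = max(peak_ram, (h * w * c + oh * ow * oc) * bytes_per_value)
        h, w, c = oh, ow, oc
    return {
        "macc_per_s": total_maccs * windows_per_s,
        "flash_bytes": total_params * bytes_per_value,
        "ram_bytes": peak_ram,
    }

def fits(usage):
    return all(usage[key] <= BUDGET[key] for key in BUDGET)

# Hypothetical model: 60 mel bands x 31 frames, three 5x5 stride-2 convs, 24 filters each
layers = [(24, 5, 2), (24, 5, 2), (24, 5, 2)]
one_per_s = model_usage((60, 31, 1), layers, windows_per_s=1)
two_per_s = model_usage((60, 31, 1), layers, windows_per_s=2)
print(one_per_s, fits(one_per_s), fits(two_per_s))
```

Note how doubling the number of windows classified per second (for example through 50% overlap) pushes the same model over the compute budget.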

Existing models

eGRU: runs on an ARM Cortex-M0 microcontroller; 61% accuracy with non-standard evaluation

Research Topics

Waveform input to model

- Preprocessing. Mel-spectrogram: 60 milliseconds

- CNN. Stride-DS-24: 81 milliseconds

- With quantization, spectrogram conversion is the bottleneck!

- Convolutions can be used to learn a Time-Frequency transformation.

Can this be faster than the standard FFT? And still perform well?
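To see why a convolution layer can replace the time-frequency transform: a strided 1D convolution whose kernels are windowed cosines and sines computes the same magnitudes as an STFT at those frequencies. In a learned front-end the kernel weights would be trained instead of fixed. A naive sketch with fixed kernels (kernel length, bin count and stride are arbitrary choices here):

```python
import math

def make_kernels(kernel_len, n_bins):
    """Hann-windowed cos/sin kernels; a learned front-end would train these weights."""
    hann = [0.5 - 0.5 * math.cos(2 * math.pi * n / kernel_len) for n in range(kernel_len)]
    kernels = []
    for k in range(n_bins):
        cos_k = [hann[n] * math.cos(2 * math.pi * k * n / kernel_len) for n in range(kernel_len)]
        sin_k = [hann[n] * math.sin(2 * math.pi * k * n / kernel_len) for n in range(kernel_len)]
        kernels.append((cos_k, sin_k))
    return kernels

def conv1d_strided(signal, kernel, stride):
    """'Valid' 1D convolution (cross-correlation, as in CNN layers) with a stride."""
    return [sum(signal[start + n] * kernel[n] for n in range(len(kernel)))
            for start in range(0, len(signal) - len(kernel) + 1, stride)]

def conv_spectrogram(signal, kernels, stride):
    """Magnitude per (frequency bin, frame), computed by strided convolutions."""
    out = []
    for cos_k, sin_k in kernels:
        re = conv1d_strided(signal, cos_k, stride)
        im = conv1d_strided(signal, sin_k, stride)
        out.append([math.hypot(r, i) for r, i in zip(re, im)])
    return out

# A pure tone completing 5 cycles per 32 samples should peak in bin 5
tone = [math.sin(2 * math.pi * 5 * n / 32) for n in range(128)]
spec = conv_spectrogram(tone, make_kernels(32, 16), stride=16)
```

Computed this way, each bin costs a full kernel-length dot product, which is slower than an FFT; the open question on the slide is whether a smaller learned filterbank can beat FFT plus mel filtering on a microcontroller.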

On-sensor inference challenges

- Reducing power consumption. Adaptive sampling

- Efficient training data collection in WSN. Active Learning?

- Real-life performance evaluations. Out-of-domain samples

Shrinking a Convolutional Neural Network

Reduce input dimensionality

- Lower frequency range

- Lower frequency resolution

- Lower time duration in window

- Lower time resolution
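Each of these knobs shrinks the mel-spectrogram the CNN must process: the sample rate bounds the frequency range (Nyquist = sample_rate / 2), the number of mel bands sets frequency resolution, and window length and hop set the time axis. A sketch with hypothetical settings, assuming frames are computed without padding:

```python
def spectrogram_shape(window_s, sample_rate, n_fft, hop_length, n_mels):
    """Shape (n_mels, n_frames) of a mel-spectrogram for one analysis window."""
    n_samples = int(window_s * sample_rate)
    n_frames = 1 + (n_samples - n_fft) // hop_length  # frames without padding
    return n_mels, n_frames

# Hypothetical configurations, for illustration only
big = spectrogram_shape(window_s=3.0, sample_rate=44100, n_fft=2048, hop_length=512, n_mels=128)
small = spectrogram_shape(window_s=1.0, sample_rate=16000, n_fft=1024, hop_length=512, n_mels=32)
print(big, small, (big[0] * big[1]) / (small[0] * small[1]))
```

In this example the smaller input has 34x fewer values, and the compute of the first convolution layer shrinks roughly in proportion.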

Reduce overlap

Models in literature use 95% overlap or more. 20x penalty in inference time!

The performance benefit is often small. Use 0% (1x) or 50% (2x) overlap instead.
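The penalty follows from the hop between windows: with overlap fraction o, the hop is (1 - o) of a window, so 1 / (1 - o) times as many windows must be classified. A small sketch:

```python
def inference_multiplier(overlap):
    """Windows to classify per window-length of audio, relative to 0% overlap."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return 1.0 / (1.0 - overlap)

def windows_per_second(window_s, overlap):
    """Classifier invocations per second of audio for a given window length."""
    hop_s = window_s * (1.0 - overlap)  # distance between window starts
    return 1.0 / hop_s

for overlap in (0.0, 0.5, 0.95):
    print(overlap, round(inference_multiplier(overlap), 1), round(windows_per_second(1.0, overlap), 1))
```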

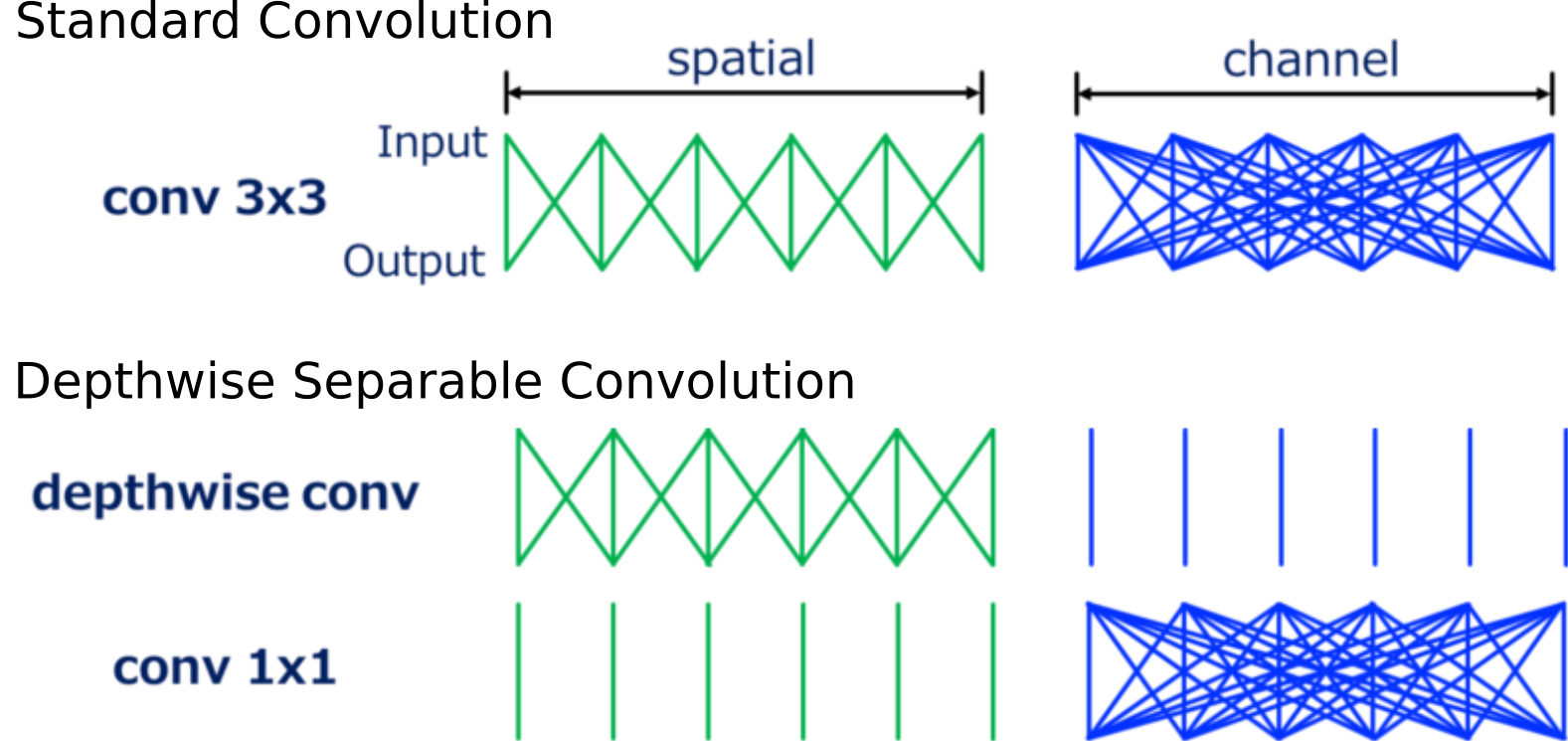

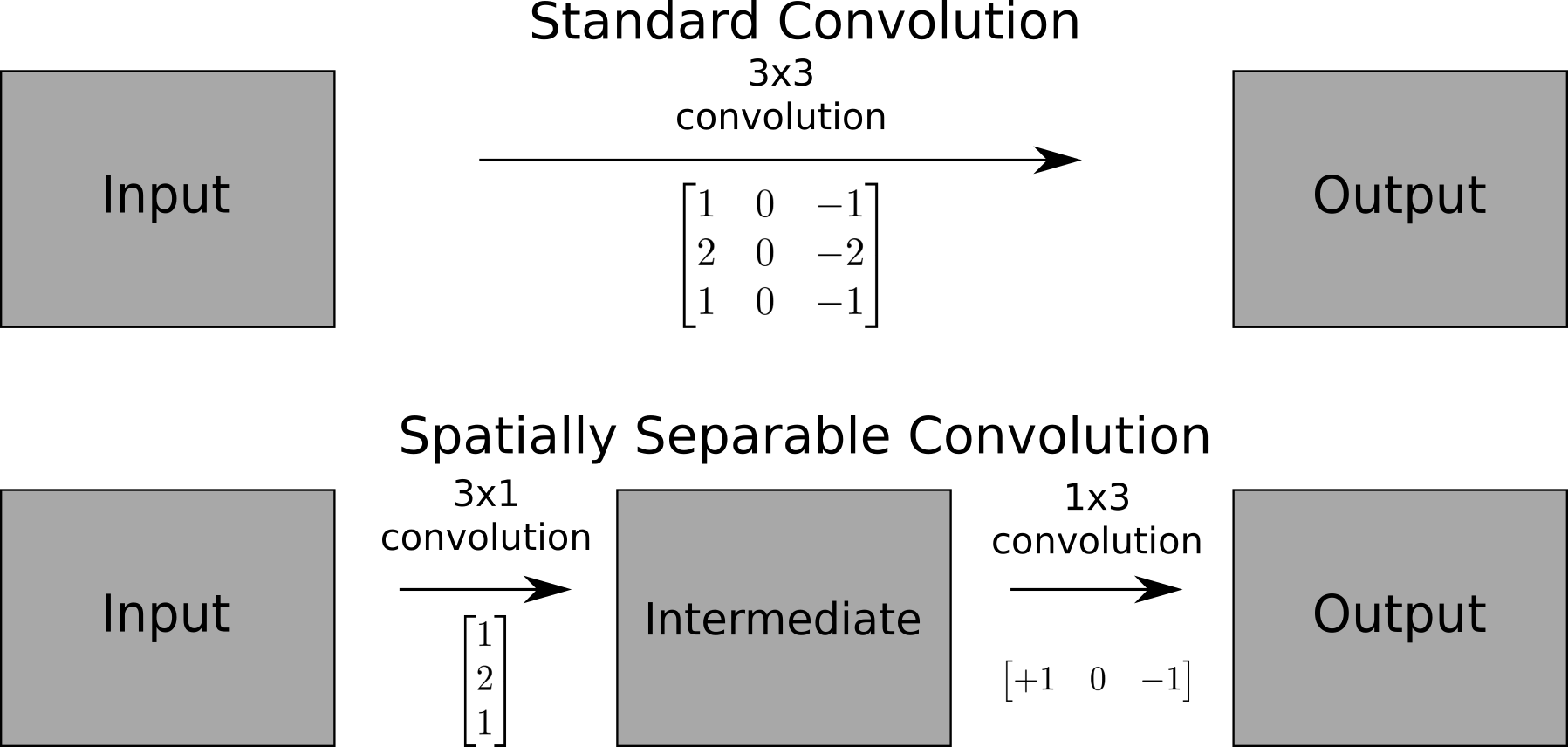

Depthwise-separable Convolution

MobileNet, “Hello Edge”, AclNet. 3x3 kernel, 64 filters: 7.5x speedup
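Where the speedup comes from: a depthwise-separable convolution splits one k x k convolution over all channels into a per-channel k x k convolution plus a 1x1 convolution that mixes channels. An idealized MACC count for a hypothetical 64-to-64-channel layer (biases, batch norm and activations ignored):

```python
def standard_conv_maccs(h, w, c_in, c_out, k):
    """Every output channel applies a k x k filter across all input channels."""
    return h * w * k * k * c_in * c_out

def depthwise_separable_maccs(h, w, c_in, c_out, k):
    depthwise = h * w * k * k * c_in  # one k x k filter per input channel
    pointwise = h * w * c_in * c_out  # 1x1 convolution mixes the channels
    return depthwise + pointwise

# Hypothetical layer: 32x32 feature map, 64 -> 64 channels, 3x3 kernel
speedup = standard_conv_maccs(32, 32, 64, 64, 3) / depthwise_separable_maccs(32, 32, 64, 64, 3)
print(round(speedup, 2))
```

The ratio simplifies to 1 / (1/c_out + 1/k²) = 576/73, about 7.9x, in the same range as the 7.5x on the slide; the exact figure depends on which operations are counted.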

Spatially-separable Convolution

EffNet, LD-CNN. 5x5 kernel: 2.5x speedup
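A spatially-separable convolution replaces the k x k kernel with a k x 1 kernel followed by a 1 x k kernel. An idealized count, assuming equal input and output channel counts (EffNet and LD-CNN use their own variants of this idea):

```python
def standard_conv_maccs(h, w, c_in, c_out, k):
    return h * w * k * k * c_in * c_out

def spatially_separable_maccs(h, w, c_in, c_out, k):
    first = h * w * k * c_in * c_out    # k x 1 kernel
    second = h * w * k * c_out * c_out  # 1 x k kernel applied to the intermediate output
    return first + second

# Hypothetical layer: 32x32 feature map, 64 -> 64 channels, 5x5 kernel
speedup = standard_conv_maccs(32, 32, 64, 64, 5) / spatially_separable_maccs(32, 32, 64, 64, 5)
print(speedup)  # 2.5
```

With equal channel counts the ratio is k² / 2k = k / 2, which gives the 2.5x for a 5x5 kernel.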

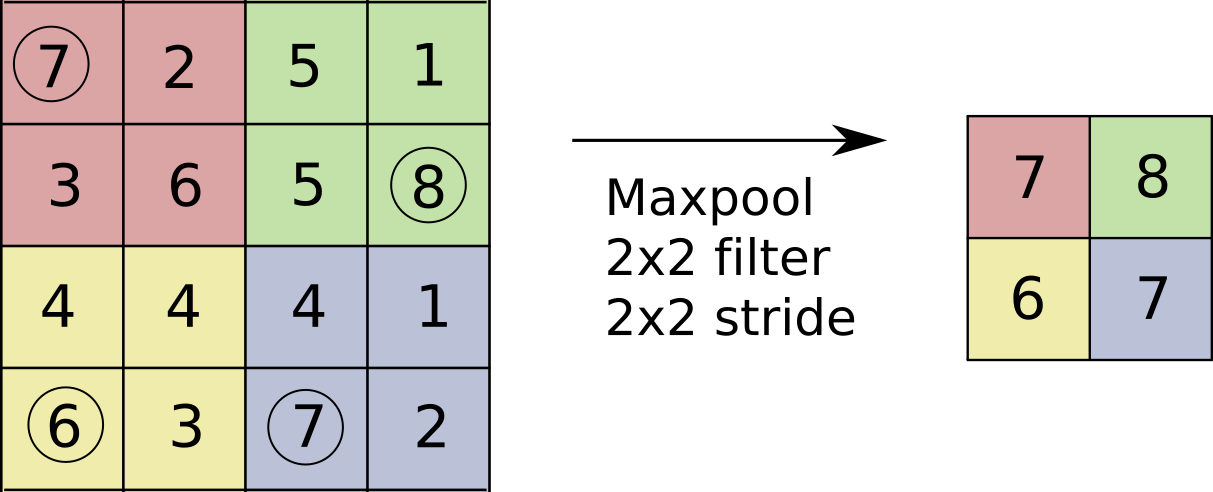

Downsampling using max-pooling

Wasteful? Computing convolutions, then throwing away 3/4 of the results!

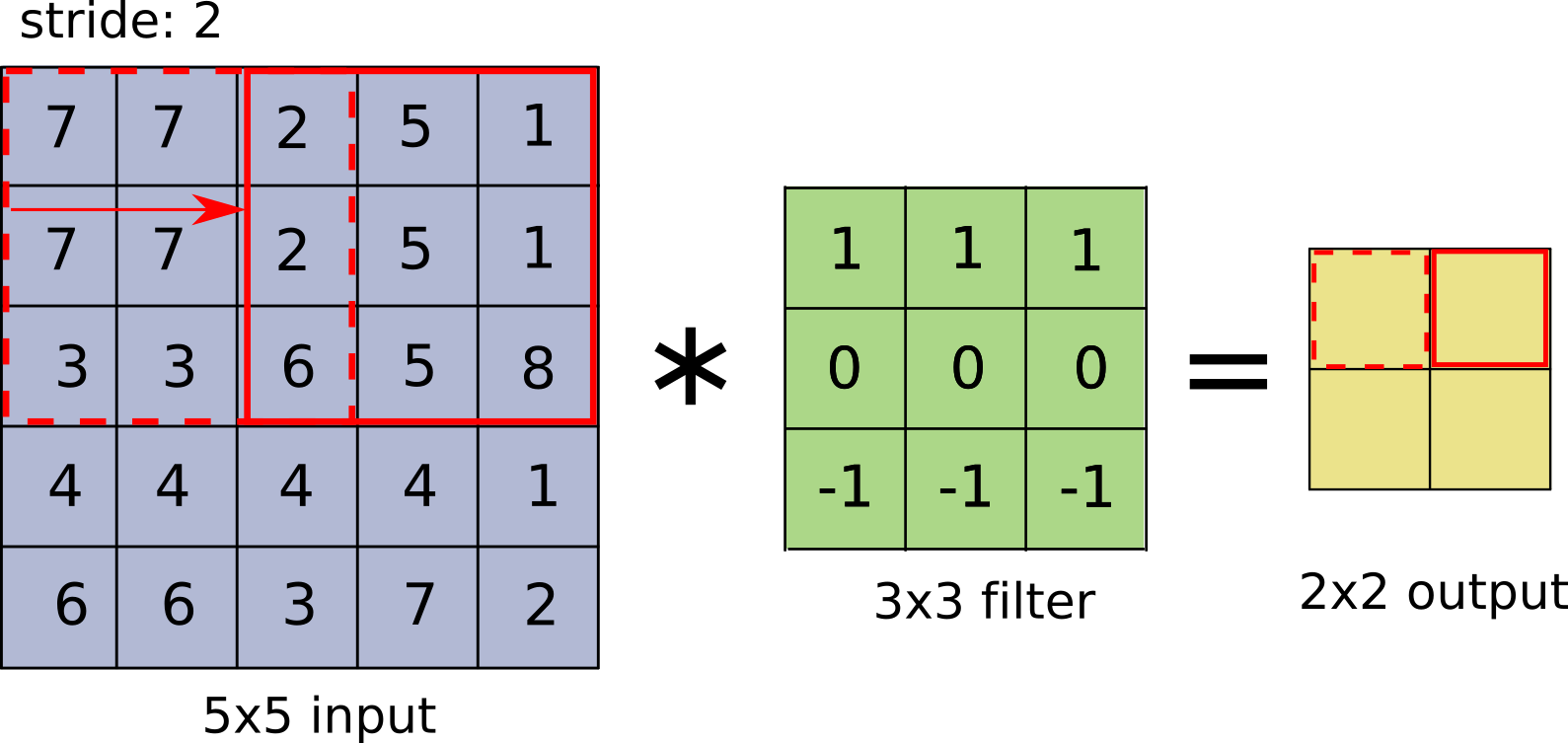

Downsampling using strided convolution

Striding means fewer computations and “learned” downsampling
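Both options halve each spatial dimension, but a stride-2 convolution only computes the outputs that are kept. A MACC count for a hypothetical 60x40 feature map with 24 channels and 5x5 kernels ('same' padding, cost of the max-pooling itself ignored):

```python
def conv_maccs(h, w, c_in, c_out, k, stride=1):
    """MACCs and output size of a 'same'-padded 2D convolution."""
    out_h, out_w = -(-h // stride), -(-w // stride)  # ceiling division
    return out_h * out_w * k * k * c_in * c_out, (out_h, out_w)

# Option A: stride-1 convolution, then 2x2 max-pooling keeps 1 of every 4 outputs
maccs_pool, (h_a, w_a) = conv_maccs(60, 40, 24, 24, 5, stride=1)
out_pool = (h_a // 2, w_a // 2)

# Option B: stride-2 convolution, a learned downsampling
maccs_stride, out_stride = conv_maccs(60, 40, 24, 24, 5, stride=2)

print(out_pool, out_stride, maccs_pool / maccs_stride)
```

Same output size, 4x fewer multiply-accumulates for the strided version.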

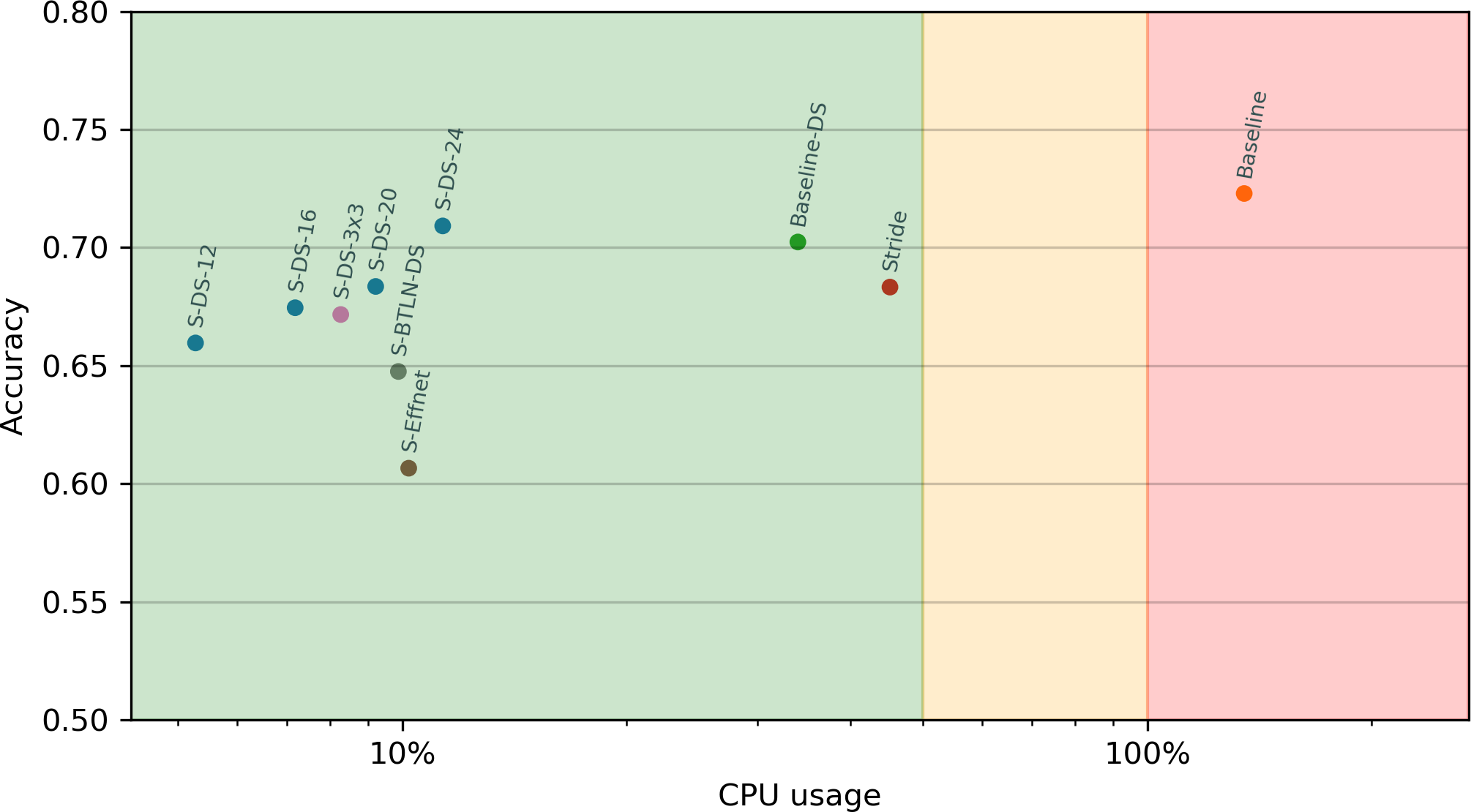

Model comparison

Performance vs compute

Quantization

Inference can often use 8 bit integers instead of 32 bit floats

- 1/4 the size for weights (FLASH) and activations (RAM)

- 8bit SIMD on ARM Cortex M4F: 1/4 the inference time

- Supported in X-CUBE-AI 4.x (July 2019)
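The core idea of 8-bit quantization is to map each float range onto 256 integer levels with a scale and a zero point, so that real ≈ scale × (q - zero_point). A minimal sketch of this affine scheme, similar in spirit to TensorFlow Lite's int8 format; it is not a description of X-CUBE-AI's internal implementation, and the weights are hypothetical:

```python
def quant_params(x_min, x_max, n_bits=8):
    """Scale and zero point mapping [x_min, x_max] onto signed n-bit integers."""
    q_min, q_max = -(2 ** (n_bits - 1)), 2 ** (n_bits - 1) - 1
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)  # range must contain 0
    scale = (x_max - x_min) / (q_max - q_min) or 1.0  # avoid a zero scale
    zero_point = round(q_min - x_min / scale)
    return scale, zero_point, q_min, q_max

def quantize(x, scale, zero_point, q_min, q_max):
    return max(q_min, min(q_max, round(x / scale) + zero_point))

def dequantize(q, scale, zero_point):
    return (q - zero_point) * scale

weights = [-0.8, -0.1, 0.0, 0.25, 1.2]  # hypothetical float32 weights
scale, zp, q_min, q_max = quant_params(min(weights), max(weights))
q = [quantize(x, scale, zp, q_min, q_max) for x in weights]
restored = [dequantize(v, scale, zp) for v in q]
print(q, [round(r, 3) for r in restored])
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half a quantization step within the calibrated range.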