Classification of Environmental Sound using IoT sensors

Jon Nordby jon@soundsensing.no

November 19, 2019

Introduction

Jon Nordby

Internet of Things specialist

- B.Eng. in Electronics

- 9 years as software developer. Embedded + Web

- M.Sc. in Data Science

Now:

- CTO at Soundsensing

- Machine Learning Consultant

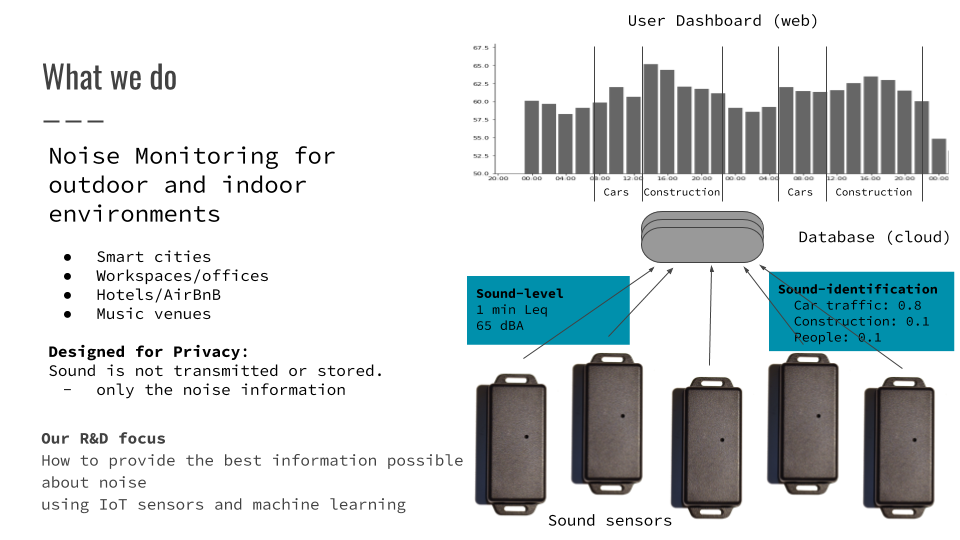

Soundsensing

Dashboard

Thesis

Environmental Sound Classification on Microcontrollers using Convolutional Neural Networks

Wireless Sensor Networks

- Want: Wide and dense coverage

- Need: Sensors need to be low-cost

- Opportunity: Wireless reduces costs

- Challenge: Power consumption

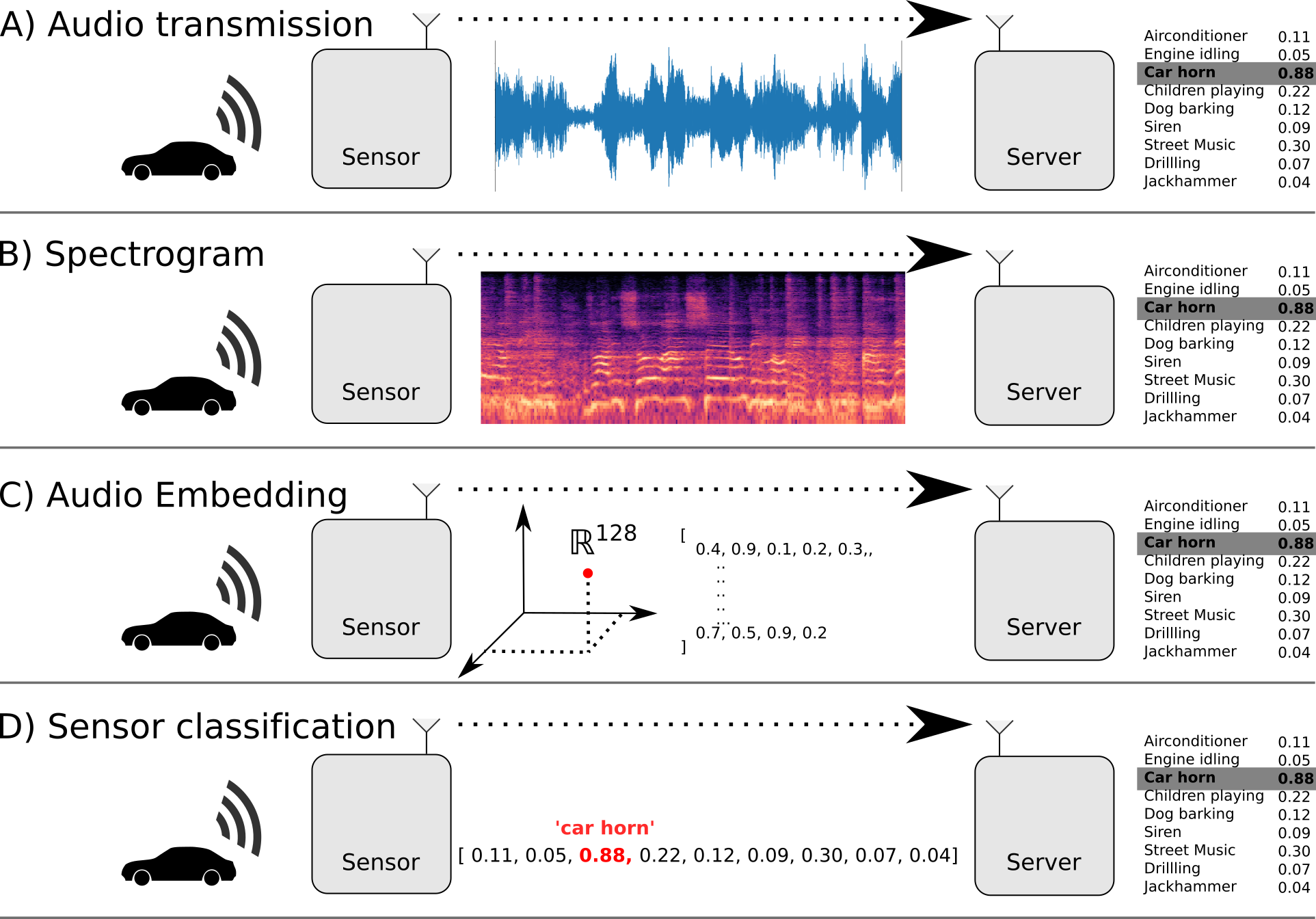

Sensor Network Architectures

Audio Machine Learning on low-power sensors

What do you mean by low-power?

Want: 1-year lifetime on a palm-sized battery

Need: < 1 mW average system power

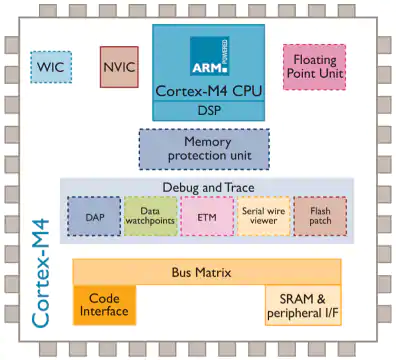
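The power budget above follows from simple energy arithmetic. A minimal sketch, assuming a constant average power draw and a nominal cell voltage of 3.6 V (an assumption, typical of lithium primary cells, not stated on the slide):

```python
HOURS_PER_YEAR = 365 * 24  # 8760 h, ignoring leap years


def required_energy_mwh(avg_power_mw, lifetime_hours):
    """Energy the battery must store to sustain avg_power_mw for lifetime_hours."""
    return avg_power_mw * lifetime_hours


def required_capacity_mah(energy_mwh, voltage_v):
    """Convert stored energy to cell capacity at a nominal voltage."""
    return energy_mwh / voltage_v


def lifetime_days(energy_mwh, avg_power_mw):
    """How long a battery holding energy_mwh lasts at a constant power draw."""
    return energy_mwh / avg_power_mw / 24


energy = required_energy_mwh(1.0, HOURS_PER_YEAR)
print(f"energy: {energy / 1000:.2f} Wh")                                  # 8.76 Wh
print(f"capacity at 3.6 V: {required_capacity_mah(energy, 3.6):.0f} mAh")  # 2433 mAh
print(f"lifetime at 10 mW: {lifetime_days(energy, 10.0):.1f} days")       # 36.5 days
```

The last line shows that the same battery would last only about a month for a device drawing 10 mW continuously.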

General purpose microcontroller

STM32L4 @ 80 MHz. Approx 10 mW.

- TensorFlow Lite for Microcontrollers (Google)

- ST X-CUBE-AI (ST Microelectronics)

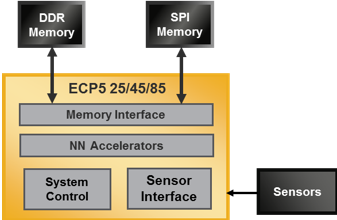

FPGA

Human presence detection. VGG8 on 64x64 RGB image, 5 FPS: 7 mW.

Audio ML approx 1 mW

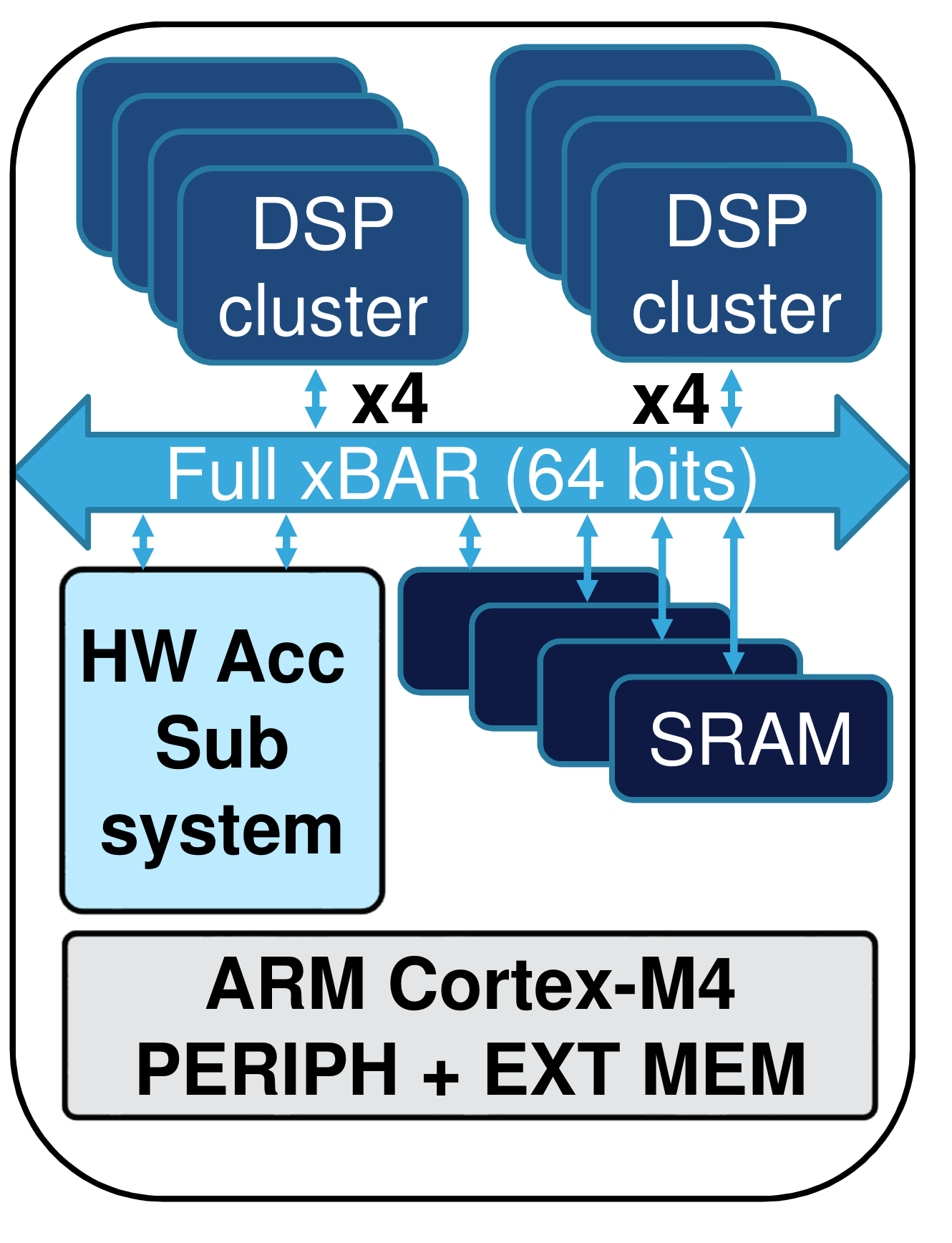

Neural Network co-processors

2.9 TOPS/W. AlexNet, 1000 classes, 10 FPS: 41 mW

Audio models probably < 1 mW.
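A back-of-envelope check of this estimate, counting arithmetic energy only at the quoted 2.9 TOPS/W efficiency. It assumes one MACC equals two operations (a common convention) and reuses the 4.5 M MACC/second model budget given in the "Model requirements" slide; memory access, I/O and idle power are ignored, so real figures will be higher:

```python
def compute_power_w(maccs_per_second, tops_per_watt, ops_per_macc=2):
    """Power spent on arithmetic alone at a given efficiency in TOPS/W."""
    ops_per_second = maccs_per_second * ops_per_macc
    joules_per_op = 1.0 / (tops_per_watt * 1e12)
    return ops_per_second * joules_per_op


p = compute_power_w(4.5e6, 2.9)
print(f"{p * 1e6:.1f} uW")  # 3.1 uW
```

For audio-sized models the arithmetic itself is in the microwatt range, so overheads rather than compute would likely set the power floor.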

On-edge Classification of Noise

Environmental Sound Classification

Given an audio signal of environmental sounds, determine which class it belongs to.

- Widely researched. 1000 hits on Google Scholar

- Datasets: Urbansound8k (10 classes), ESC-50 (50 classes), AudioSet (632 classes)

- 2017: Human-level performance on ESC-50

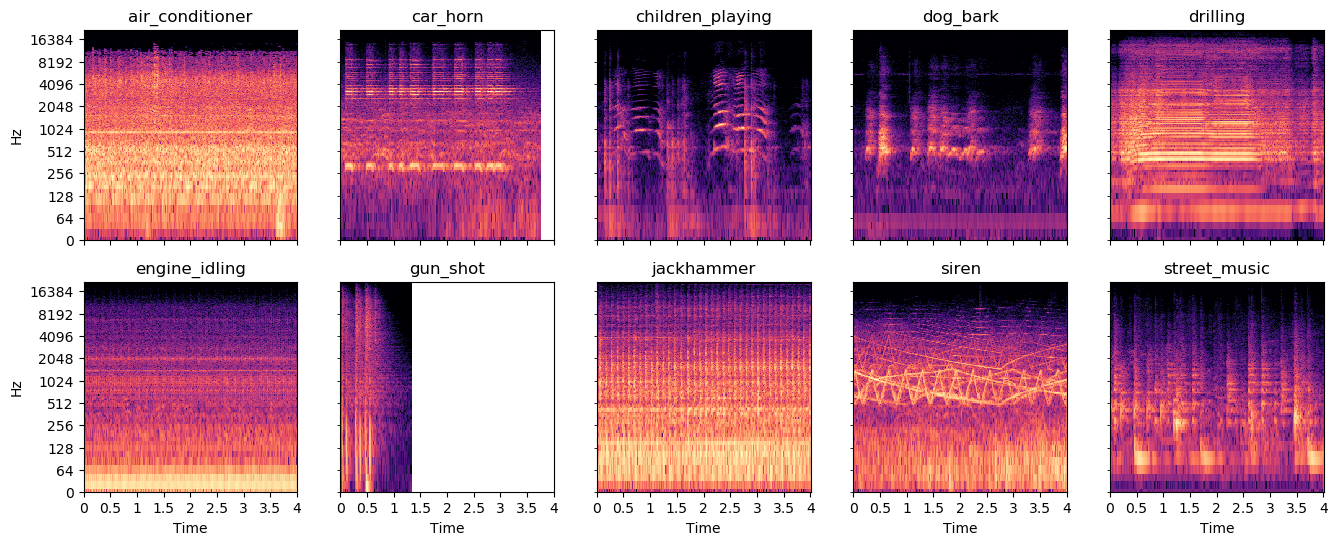

Urbansound8k

Existing work

- Convolutional Neural Networks dominate

- Techniques come from image classification

- Mel-spectrogram input standard

- End-to-end models: getting close in accuracy

- “Edge ML” focused on mobile-phone class HW

- “Tiny ML” (sensors) just starting
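A minimal NumPy sketch of the standard log mel-spectrogram input. The HTK-style mel formula, the Hann window and the parameter values (n_fft=1024, hop=512, 60 mel bands, 16 kHz) are illustrative assumptions, not the settings used in the thesis; libraries such as librosa provide tuned equivalents. Requires NumPy >= 1.20 for `sliding_window_view`.

```python
import numpy as np


def hz_to_mel(f_hz):
    """HTK-style mel scale."""
    return 2595.0 * np.log10(1.0 + np.asarray(f_hz) / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10.0 ** (np.asarray(mel) / 2595.0) - 1.0)


def mel_filterbank(n_mels, n_fft, sr, fmin=0.0, fmax=None):
    """Triangular filters equally spaced on the mel scale, shape (n_mels, n_fft//2 + 1)."""
    fmax = sr / 2.0 if fmax is None else fmax
    fft_freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    edges = mel_to_hz(np.linspace(hz_to_mel(fmin), hz_to_mel(fmax), n_mels + 2))
    bank = np.zeros((n_mels, fft_freqs.size))
    for i in range(n_mels):
        left, center, right = edges[i], edges[i + 1], edges[i + 2]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        bank[i] = np.maximum(0.0, np.minimum(rising, falling))
    return bank


def log_mel_spectrogram(signal, sr, n_fft=1024, hop=512, n_mels=60, fmin=0.0, fmax=None):
    """Log mel-band energies, shape (n_mels, n_frames); frames are taken without padding."""
    frames = np.lib.stride_tricks.sliding_window_view(signal, n_fft)[::hop]
    power = np.abs(np.fft.rfft(frames * np.hanning(n_fft), axis=1)) ** 2
    mel = power @ mel_filterbank(n_mels, n_fft, sr, fmin, fmax).T
    return np.log(mel + 1e-10).T


sr = 16000
t = np.arange(sr) / sr                  # 1 second of audio
tone = np.sin(2 * np.pi * 1000.0 * t)   # 1 kHz test tone
S = log_mel_spectrogram(tone, sr)
print(S.shape)                          # (60, 30)
```

The `fmin`/`fmax` and `n_mels` parameters are exactly the knobs used later to reduce input dimensionality.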

Model requirements

With 50% of STM32L476 capacity:

- 64 kB RAM

- 512 kB FLASH memory

- 4.5 M MACC/second
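Whether a layer fits these limits can be estimated by counting multiply-accumulates and activation memory. A minimal sketch; the layer shape (a 60x31 spectrogram window, 3x3 kernel, 24 filters, "same" padding, float32 activations, one window classified per second) is a hypothetical example, not a model from the thesis:

```python
RAM_BUDGET_BYTES = 64 * 1024   # 64 kB
MACC_BUDGET_PER_S = 4.5e6      # 4.5 M MACC/second


def conv2d_maccs(h_out, w_out, k_h, k_w, c_in, c_out):
    """Multiply-accumulates for one standard 2D convolution layer (bias ignored)."""
    return h_out * w_out * k_h * k_w * c_in * c_out


def activation_bytes(h, w, c, bytes_per_value=4):
    """Memory for one feature map; 4 bytes per value for float32."""
    return h * w * c * bytes_per_value


# Hypothetical first layer, assuming one 60x31 input window is classified per second
maccs = conv2d_maccs(60, 31, 3, 3, 1, 24)
ram = activation_bytes(60, 31, 24)
print(maccs, maccs <= MACC_BUDGET_PER_S)   # 401760 True
print(ram, ram <= RAM_BUDGET_BYTES)        # 178560 False
```

Even this single layer would exceed the RAM budget at full resolution, which is what motivates the shrinking strategies that follow.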

Existing models

eGRU: running on ARM Cortex-M0 microcontroller, accuracy 61% with non-standard evaluation

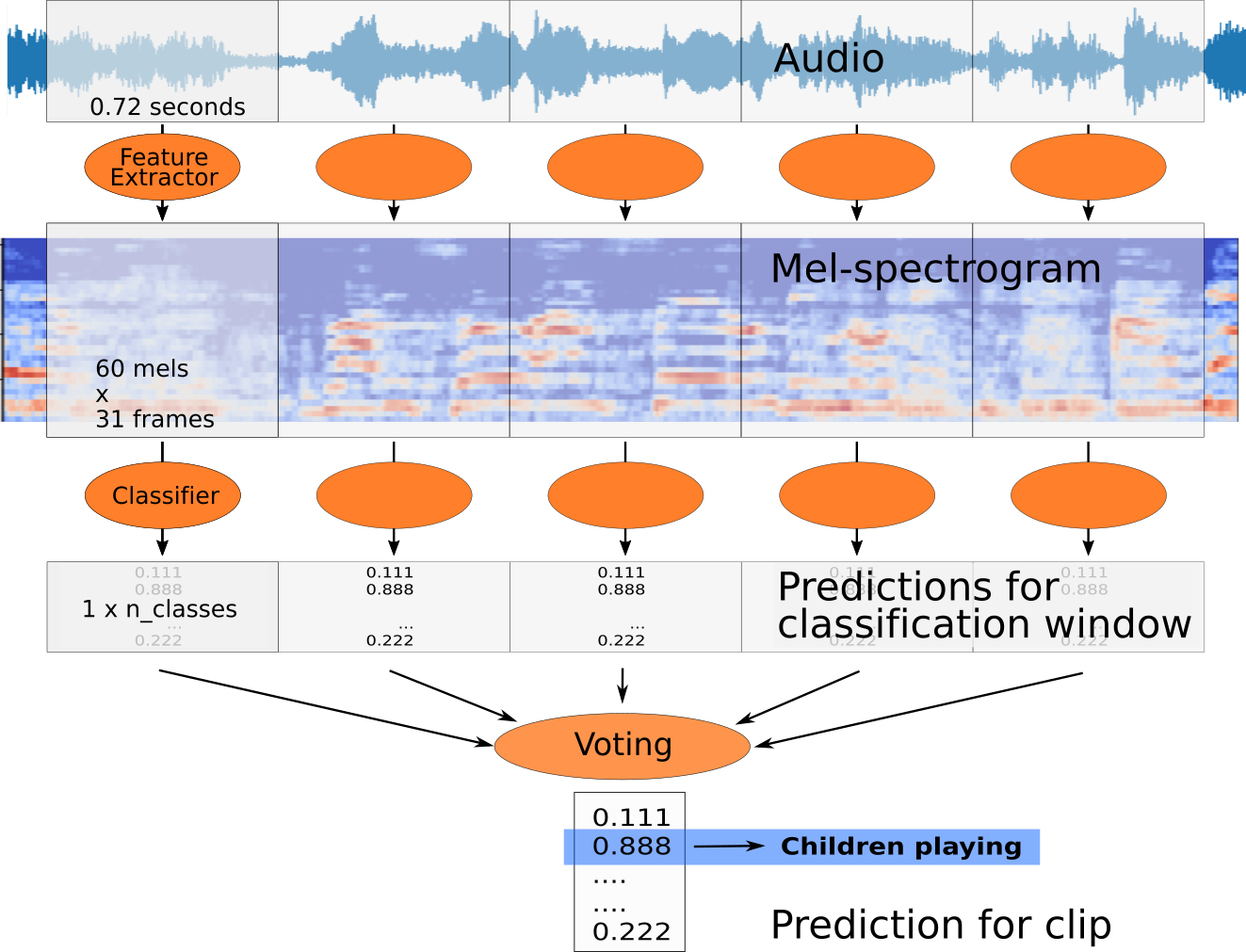

Pipeline

Models

Strategies for shrinking Convolutional Neural Networks

Reduce input dimensionality

- Lower frequency range

- Lower frequency resolution

- Lower time duration in window

- Lower time resolution

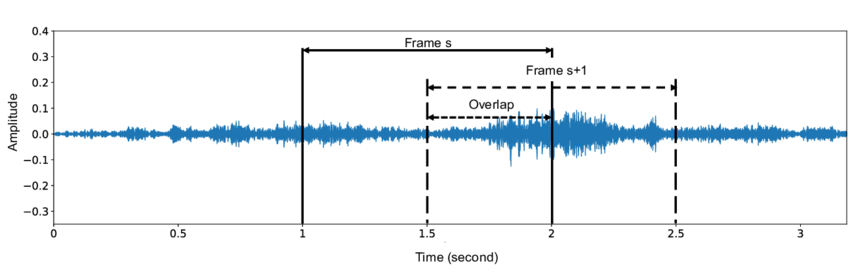
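How these choices shrink the input can be read off the spectrogram shape. A minimal sketch, assuming frames are taken without padding; the sample rate, window, hop and band counts are hypothetical values chosen for illustration:

```python
def spectrogram_shape(sr, duration_s, n_fft, hop, n_bands):
    """(frequency bands, time frames) for an unpadded framing of one analysis window."""
    n_samples = int(sr * duration_s)
    n_frames = 1 + (n_samples - n_fft) // hop
    return n_bands, n_frames


def n_values(shape):
    bands, frames = shape
    return bands * frames


full = spectrogram_shape(sr=16000, duration_s=1.0, n_fft=1024, hop=512, n_bands=60)
# Fewer mel bands (lower frequency resolution) and a larger hop (lower time resolution)
small = spectrogram_shape(sr=16000, duration_s=1.0, n_fft=1024, hop=1024, n_bands=30)
print(full, small, n_values(full) / n_values(small))   # (60, 30) (30, 15) 4.0
```

A shorter `duration_s` reduces the frame count directly, and lowering the upper frequency limit permits a lower sample rate (it must be at least twice the highest frequency of interest), which reduces the samples per window.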

Reduce overlap

Many models in the literature use 95% overlap or more. 20x penalty in inference time!

Often low performance benefit. Use 0% (1x) or 50% (2x).

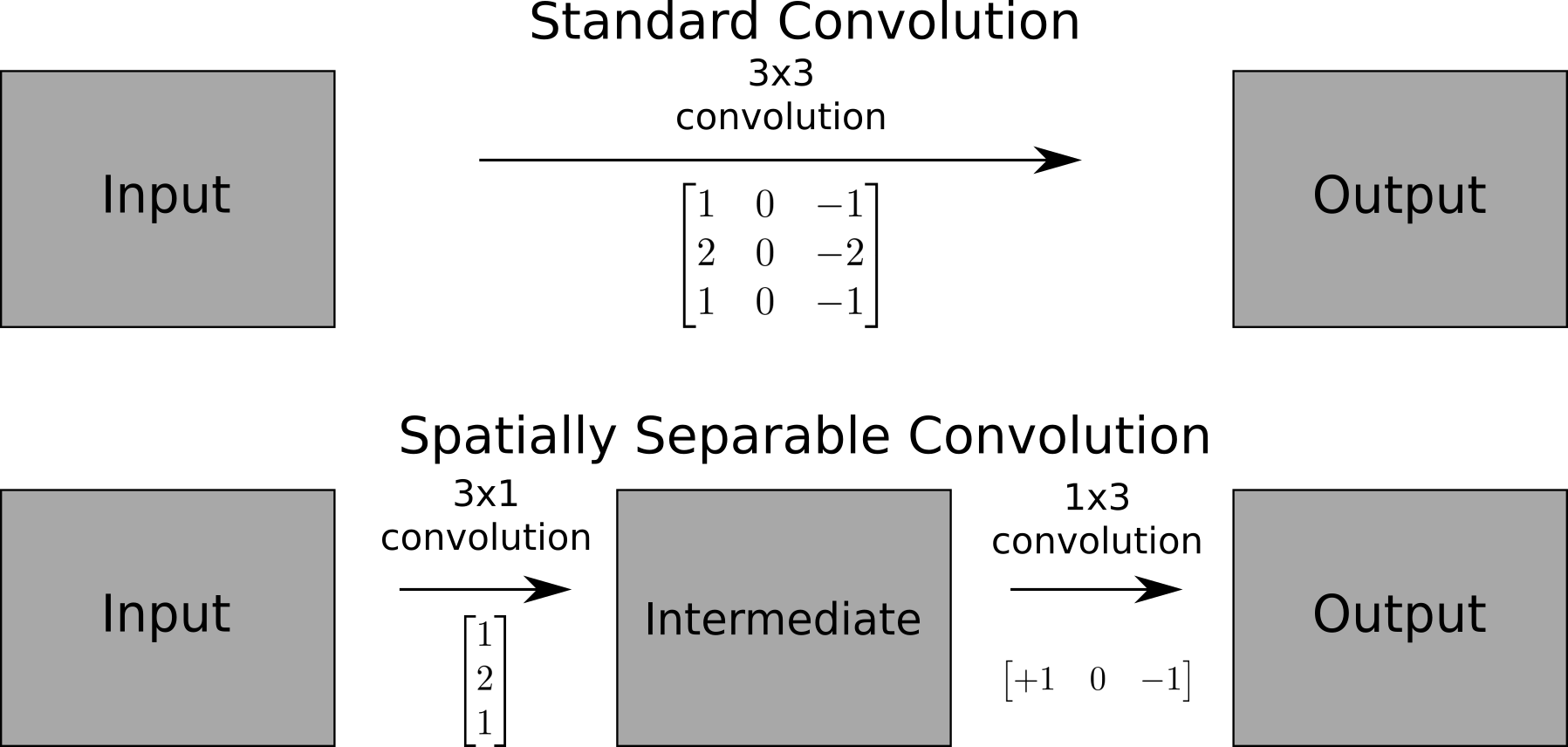
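Each analysis window advances by (1 - overlap) of its length, so the number of inferences per window-length of audio is 1 / (1 - overlap). A minimal sketch of that arithmetic:

```python
def overlap_cost_factor(overlap):
    """Inference runs per window-length of audio, relative to 0% overlap."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return 1.0 / (1.0 - overlap)


for ov in (0.0, 0.5, 0.95):
    print(f"{ov:.0%} overlap -> {overlap_cost_factor(ov):.0f}x")
# 0% overlap -> 1x
# 50% overlap -> 2x
# 95% overlap -> 20x
```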

Depthwise-separable Convolution

MobileNet, “Hello Edge”, AclNet. 3x3 kernel, 64 filters: 7.5x speedup

Spatially-separable Convolution

EffNet, LD-CNN. 5x5 kernel: 2.5x speedup

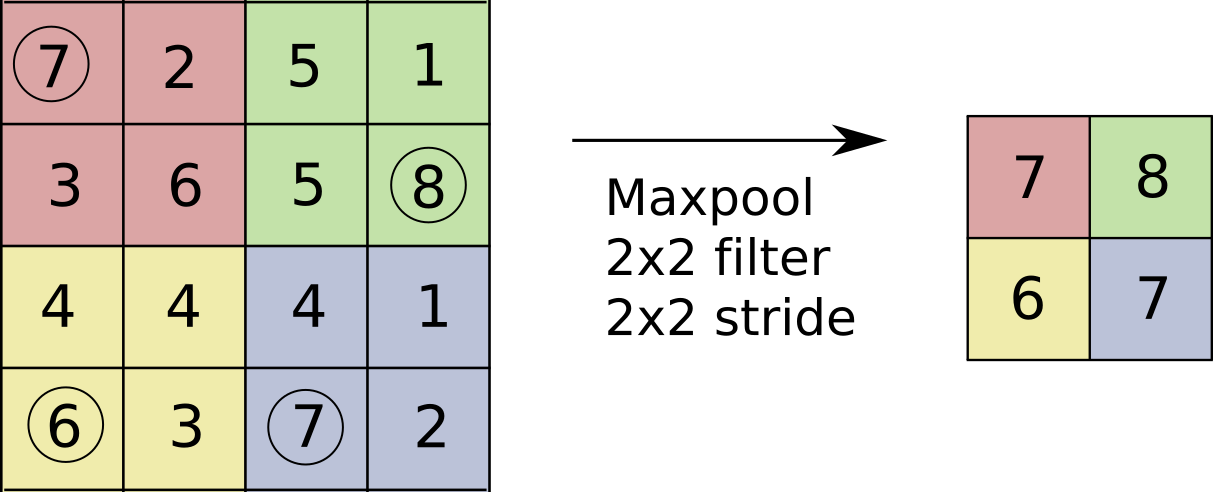
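Both speedups follow from counting multiply-accumulates per output position. A minimal sketch; it ignores bias terms and, for the spatially-separable case, assumes the intermediate layer has as many channels as the output (an assumption; published architectures such as EffNet may differ in the details). This idealized count gives about 7.9x for the depthwise-separable example, close to the 7.5x quoted above:

```python
def standard_maccs(k, c_in, c_out):
    """Per output position: a k x k kernel over all input channels, for each filter."""
    return k * k * c_in * c_out


def depthwise_separable_maccs(k, c_in, c_out):
    """k x k depthwise conv (one filter per input channel) + 1x1 pointwise conv."""
    return k * k * c_in + c_in * c_out


def spatially_separable_maccs(k, c_in, c_out):
    """k x 1 conv followed by 1 x k conv, assuming c_out channels in between."""
    return k * c_in * c_out + k * c_out * c_out


ds = standard_maccs(3, 64, 64) / depthwise_separable_maccs(3, 64, 64)
ss = standard_maccs(5, 64, 64) / spatially_separable_maccs(5, 64, 64)
print(f"depthwise-separable 3x3, 64 filters: {ds:.1f}x")   # 7.9x
print(f"spatially-separable 5x5: {ss:.1f}x")               # 2.5x
```

In general the depthwise-separable ratio is k²·c_out / (k² + c_out), and the spatially-separable ratio (equal channels) is k / 2.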

Downsampling using max-pooling

Wasteful? Computing convolutions, then throwing away 3/4 of results!

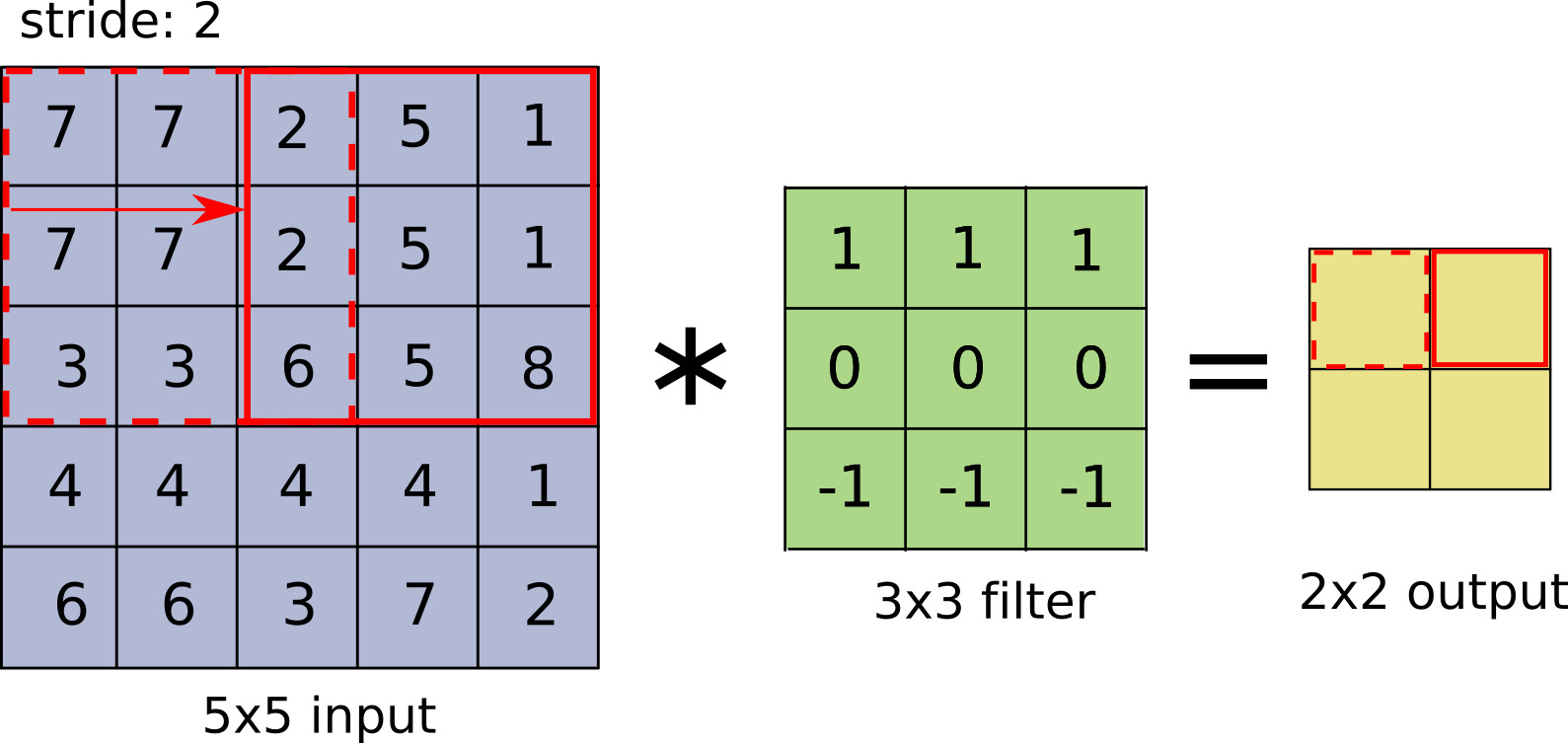
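A 2x2 max-pooling keeps one value out of every four, which is where the 3/4 figure comes from. A minimal NumPy sketch (odd trailing rows and columns are dropped, as with "valid" pooling):

```python
import numpy as np


def max_pool_2x2(x):
    """2x2 max-pooling with stride 2 over a 2D feature map."""
    h, w = (x.shape[0] // 2) * 2, (x.shape[1] // 2) * 2
    blocks = x[:h, :w].reshape(h // 2, 2, w // 2, 2)
    return blocks.max(axis=(1, 3))


fmap = np.arange(16).reshape(4, 4)
pooled = max_pool_2x2(fmap)
print(pooled.tolist())             # [[5, 7], [13, 15]]
print(pooled.size / fmap.size)     # 0.25
```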

Downsampling using strided convolution

Striding means fewer computations and “learned” downsampling

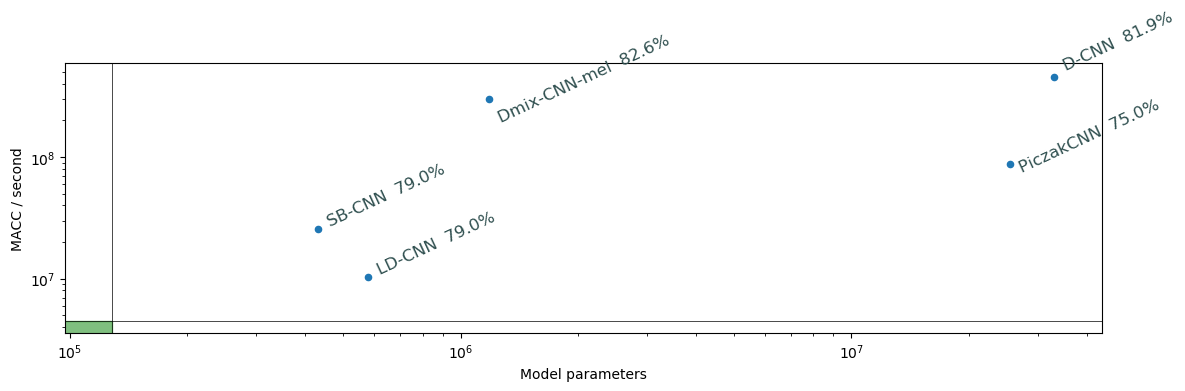
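Both approaches halve each spatial dimension, but a stride-2 convolution evaluates the kernel only at the positions it keeps. A minimal MACC count, assuming "same" padding and a hypothetical layer size:

```python
def conv_output_size(n_in, stride):
    """Output length with 'same' padding: ceil(n_in / stride)."""
    return -(-n_in // stride)


def conv_maccs(h_in, w_in, k, c_in, c_out, stride=1):
    """Multiply-accumulates for a k x k convolution with the given stride."""
    h_out = conv_output_size(h_in, stride)
    w_out = conv_output_size(w_in, stride)
    return h_out * w_out * k * k * c_in * c_out


# Hypothetical layer: 60x30 feature map, 3x3 kernel, 24 -> 24 channels
conv_then_pool = conv_maccs(60, 30, 3, 24, 24, stride=1)  # 2x2 pooling adds only comparisons
strided = conv_maccs(60, 30, 3, 24, 24, stride=2)         # same output size as conv + pool
print(conv_then_pool / strided)                           # 4.0
```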

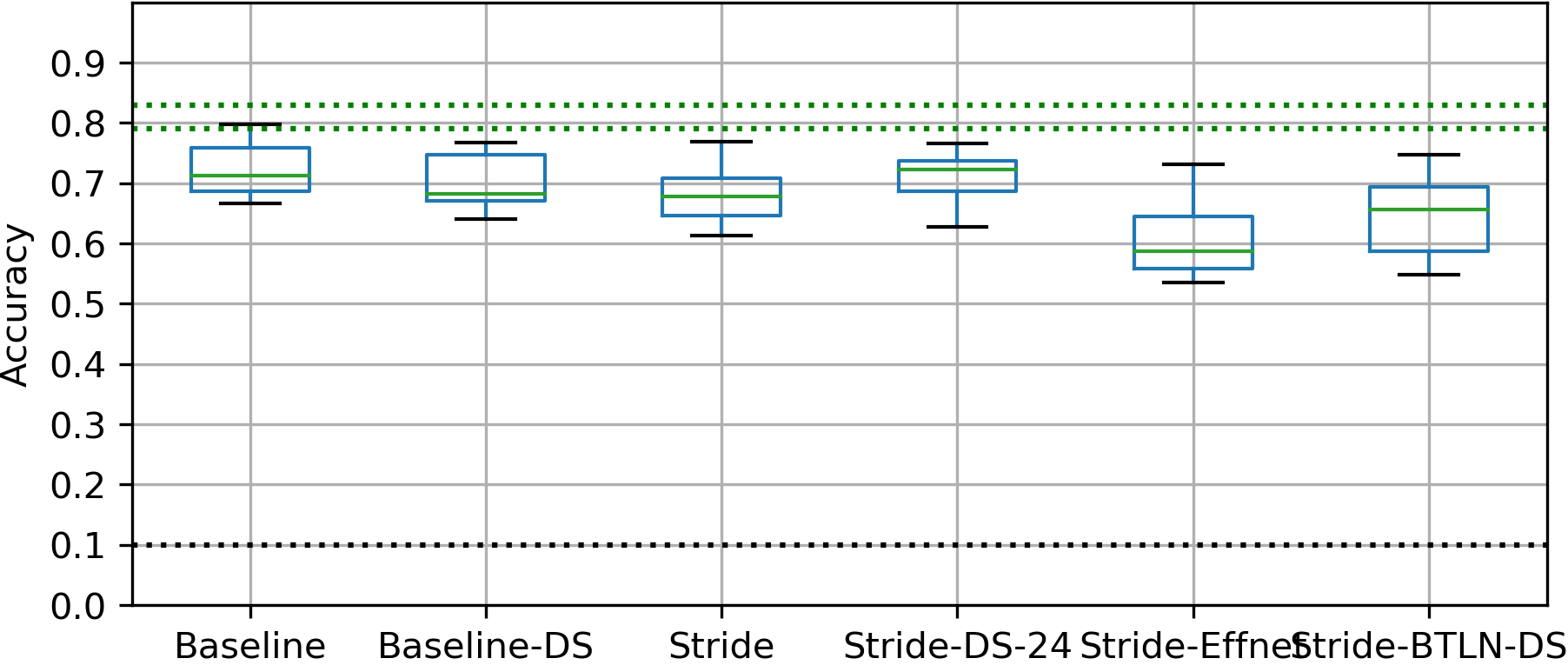

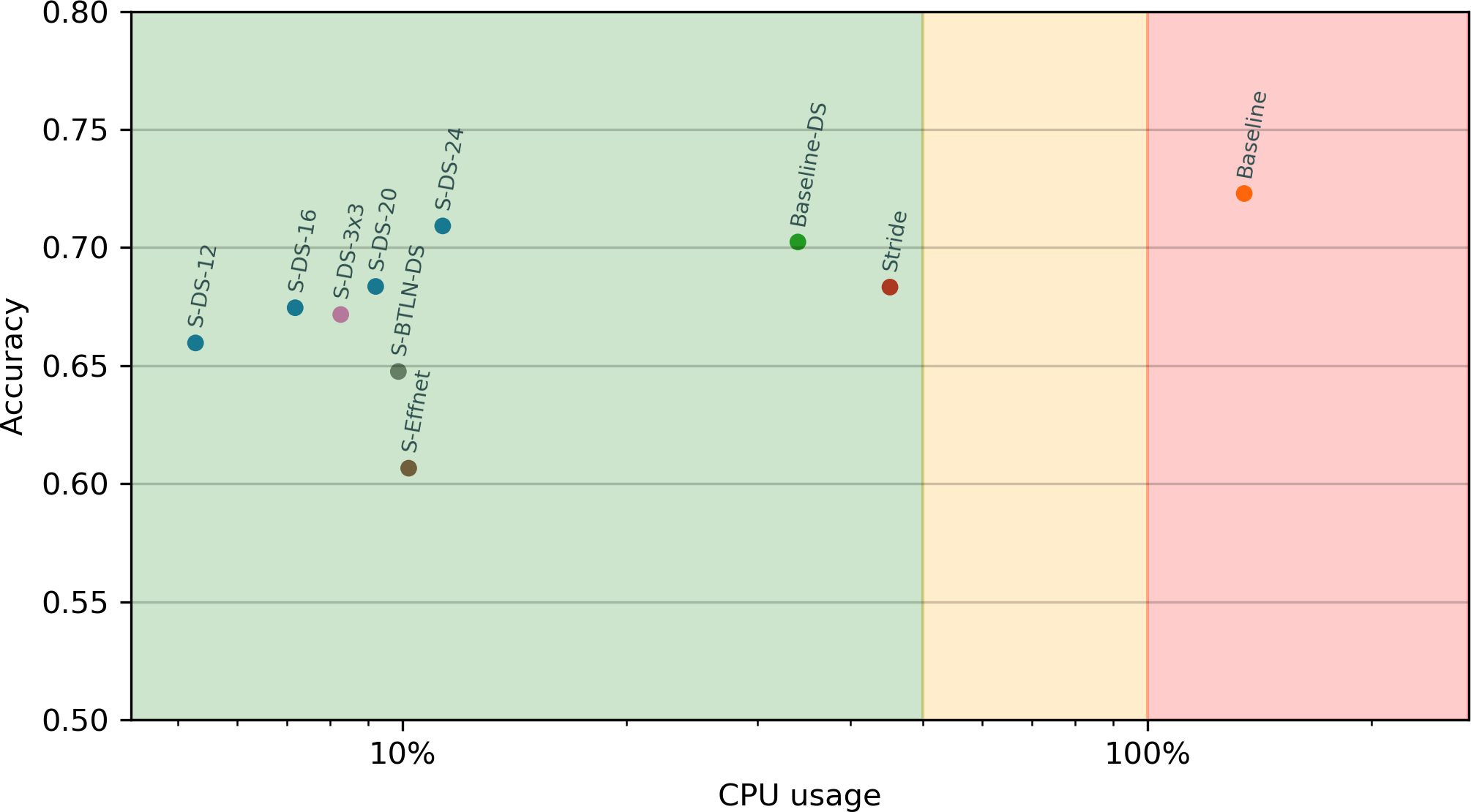

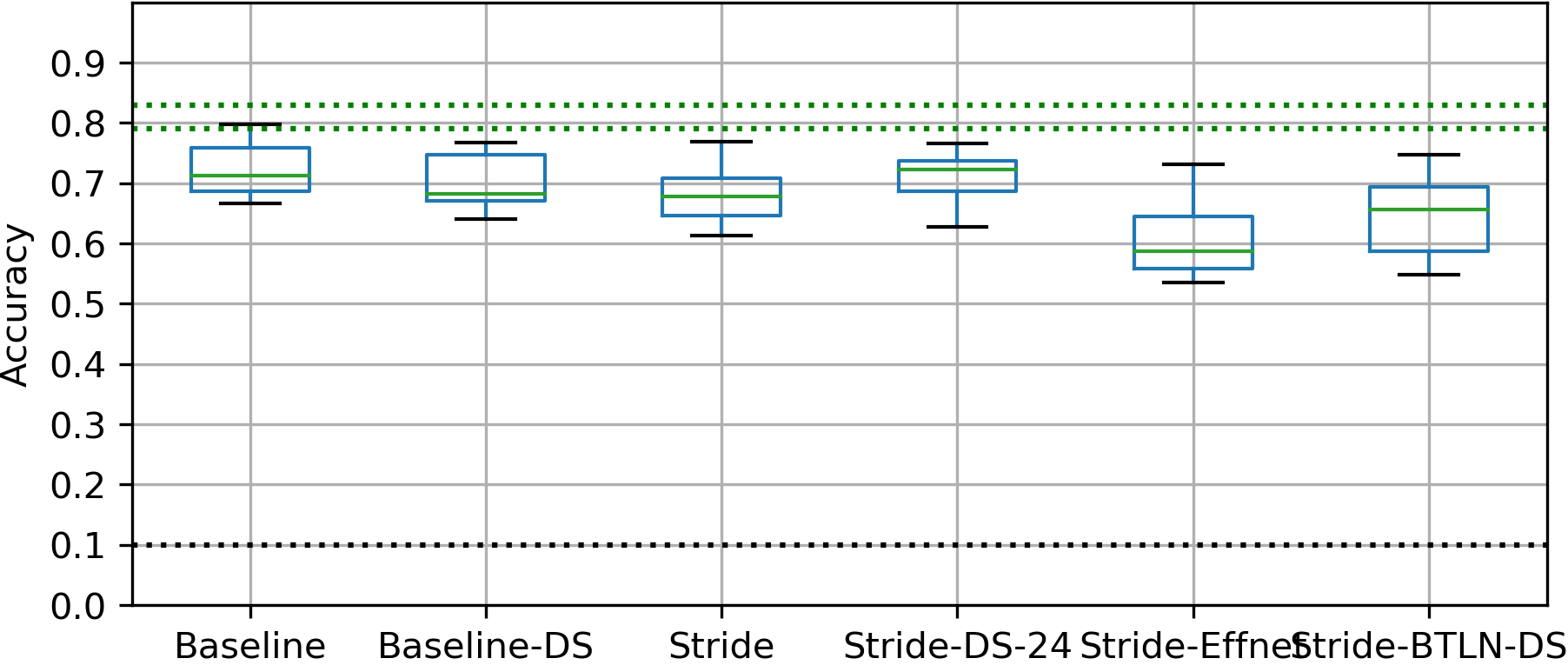

Model comparison

Performance vs compute

:::

- Performance of Strided-DS-24 similar to Baseline, despite Baseline using 12x the CPU

- Surprising? Stride alone worse than Strided-DS-24

- Bottleneck and EffNet performed poorly

- Practical speedup not linear with MACC

:::

Quantization

Inference can often use 8-bit integers instead of 32-bit floats

- 1/4 the size for weights (FLASH) and activations (RAM)

- 8-bit SIMD on ARM Cortex-M4F: 1/4 the inference time

- Supported in X-CUBE-AI 4.x (July 2019)
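A minimal NumPy sketch of one common scheme, symmetric per-tensor int8 quantization of weights. This is an illustrative choice; production toolchains typically add refinements such as zero-points for activations and per-channel scales. The weight tensor below is random, hypothetical data.

```python
import numpy as np


def quantize_int8(x):
    """Symmetric per-tensor quantization: x ~= scale * q, with q in [-127, 127]."""
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate float32 values."""
    return q.astype(np.float32) * scale


weights = np.random.default_rng(0).normal(0.0, 0.1, size=(24, 3, 3)).astype(np.float32)
q, scale = quantize_int8(weights)
error = np.max(np.abs(dequantize(q, scale) - weights))
print(weights.nbytes, q.nbytes)    # 864 216
print(error <= scale / 2 + 1e-6)   # True
```

Storage drops by 4x, and the rounding error per value is bounded by half a quantization step.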

Conclusions

- Able to perform Environmental Sound Classification at ~10 mW power

- Using a general-purpose microcontroller, ARM Cortex-M4F

- Best performance: 70.9% mean accuracy, under 20% CPU load

- Highest reported Urbansound8k accuracy on a microcontroller (previous best: eGRU, 62%)

- Best architecture: Depthwise-Separable convolutions with striding

- Quantization enables 4x bigger models (and higher perf)

- With dedicated Neural Network Hardware

Further Research

Waveform input to model

- Preprocessing. Mel-spectrogram: 60 milliseconds

- CNN. Strided-DS-24: 81 milliseconds

- With quantization (~4x faster CNN, roughly 20 ms), spectrogram conversion becomes the bottleneck!

- Convolutions can be used to learn a Time-Frequency transformation.

Can this be faster than the standard FFT? And still perform well?
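One starting point for such a learned front-end: a strided 1D convolution reproduces the STFT exactly when its kernels are windowed cosines and sines, and training could then adjust those kernels. A minimal NumPy sketch verifying the equivalence (Hann window, n_fft=256 and hop=128 are illustrative choices; requires NumPy >= 1.20 for `sliding_window_view`):

```python
import numpy as np


def dft_kernels(n_fft):
    """Windowed cosine/sine kernels, one pair per frequency bin 0..n_fft/2."""
    n = np.arange(n_fft)
    k = np.arange(n_fft // 2 + 1)[:, None]
    window = np.hanning(n_fft)
    real = np.cos(2 * np.pi * k * n / n_fft) * window
    imag = -np.sin(2 * np.pi * k * n / n_fft) * window
    return real, imag


def strided_conv1d(signal, kernels, stride):
    """'Valid' 1D cross-correlation with each kernel, evaluated every `stride` samples."""
    windows = np.lib.stride_tricks.sliding_window_view(signal, kernels.shape[1])[::stride]
    return windows @ kernels.T             # shape (n_frames, n_kernels)


def conv_spectrogram(signal, n_fft=256, hop=128):
    real_k, imag_k = dft_kernels(n_fft)
    re = strided_conv1d(signal, real_k, hop)
    im = strided_conv1d(signal, imag_k, hop)
    return np.sqrt(re ** 2 + im ** 2)      # magnitude, shape (n_frames, n_fft//2 + 1)


def fft_spectrogram(signal, n_fft=256, hop=128):
    frames = np.lib.stride_tricks.sliding_window_view(signal, n_fft)[::hop]
    return np.abs(np.fft.rfft(frames * np.hanning(n_fft), axis=1))


signal = np.random.default_rng(0).standard_normal(4096)
print(np.allclose(conv_spectrogram(signal), fft_spectrogram(signal)))   # True
```

Computed this way, each frame costs about 2 × n_fft × (n_fft/2 + 1) multiply-accumulates versus O(n_fft log n_fft) for the FFT, so a learned front-end would likely need fewer or shorter kernels to be faster.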

On-sensor inference challenges

- Reducing power consumption. Adaptive sampling

- Efficient training data collection in WSN. Active Learning?

- Real-life performance evaluations. Out-of-domain samples

Wrapping up

Summary

- Noise pollution is a growing problem

- Wireless Sensor Networks can be used to quantify it

- Noise Classification can provide more information

- Want high density of sensors. Need to be low cost

- On-sensor classification desirable for power/cost and privacy

More resources

Machine Hearing. ML on Audio

Machine Learning for Embedded / IoT

Thesis Report & Code

Questions

?

Email: jon@soundsensing.no

Come talk to me!

- Noise Monitoring sensors. Pilot projects for 2020?

- Environmental Sound, Wireless Sensor Networks for Audio. Research partnering?

- “On-edge” / Embedded Device ML. Happy to advise!

Email: jon@soundsensing.no

Thesis results

Model comparison

List of results

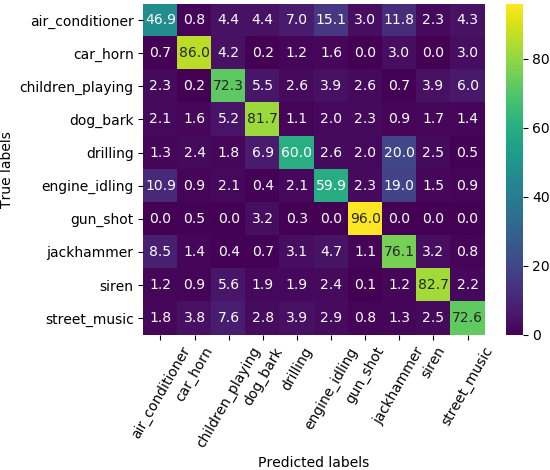

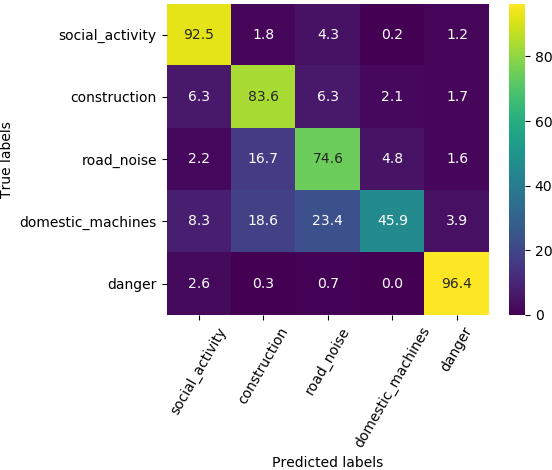

Confusion

Grouped classification

Foreground-only

Unknown class

Experimental Details

All models

Methods

Standard procedure for Urbansound8k

- Classification problem

- 4 second sound clips

- 10 classes

- 10-fold cross-validation, using the predefined folds

- Metric: Accuracy
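Urbansound8k assigns every clip a fold number from 1 to 10, and using these predefined folds rather than reshuffling is what makes results comparable across papers. A minimal sketch of generating the splits; taking the next fold as the validation fold is a hypothetical convention for this illustration, not necessarily the thesis setup:

```python
def fold_splits(clip_folds, n_folds=10):
    """Yield (test_fold, train_idx, val_idx, test_idx) for each predefined fold.

    clip_folds: fold number (1..n_folds) for each clip, e.g. from the dataset
    metadata. Using the next fold for validation is a hypothetical choice.
    """
    for test_fold in range(1, n_folds + 1):
        val_fold = test_fold % n_folds + 1
        train, val, test = [], [], []
        for idx, fold in enumerate(clip_folds):
            if fold == test_fold:
                test.append(idx)
            elif fold == val_fold:
                val.append(idx)
            else:
                train.append(idx)
        yield test_fold, train, val, test


folds = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10] * 2   # toy metadata: 2 clips per fold
for test_fold, train, val, test in fold_splits(folds):
    assert not set(train) & set(test) and not set(val) & set(test)
print(len(list(fold_splits(folds))))          # 10
```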

Training settings

Training

- NVIDIA RTX 2060 GPU, 6 GB

- 10 models x 10 folds = 100 training jobs

- 100 epochs

- 3 jobs in parallel

- 36 hours total

Evaluation

For each fold of each model

- Select best model based on validation accuracy

- Calculate accuracy on test set

For each model

- Measure CPU time on device
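The per-fold selection can be sketched as follows. The checkpoint representation and the two accuracy functions are hypothetical placeholders for a real training run, and the numbers are toy values, not results:

```python
from statistics import mean


def evaluate_fold(checkpoints, val_accuracy, test_accuracy):
    """Pick the checkpoint with the best validation accuracy, report its test accuracy."""
    best = max(checkpoints, key=val_accuracy)
    return test_accuracy(best)


def evaluate_model(folds):
    """Mean test accuracy over folds; each fold is (checkpoints, val_fn, test_fn)."""
    return mean(evaluate_fold(*fold) for fold in folds)


# Toy example: checkpoints are epoch numbers with made-up accuracies
val = {1: 0.60, 2: 0.72, 3: 0.70}
test = {1: 0.58, 2: 0.69, 3: 0.71}
print(evaluate_fold([1, 2, 3], val.get, test.get))   # 0.69
```

Selecting on validation accuracy keeps the test fold out of model selection: in the toy data, epoch 2 is chosen even though epoch 3 scores higher on test.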

Your model will trick you

And the bugs can be hard to spot

FAIL: Integer truncation

FAIL: Dropout location

Background

Mel-spectrogram

Noise Pollution

Harms health through stress and loss of sleep

In Norway

- 1.9 million affected by road noise (2014, SSB)

- 10’000 healthy years lost per year (Folkehelseinstituttet)

In Europe

- 13 million suffering from sleep disturbance (EEA)

- 900’000 DALYs (disability-adjusted life years) lost (WHO)

Noise Mapping

Simulation only, no direct measurements